Unity Technologies

Mixed and Augmented Reality Studio

With MARS, we are trying to merge the virtual and real worlds as much as we can. After all, to the computer, it's all the same thing.

Quick Summary

NAME

Unity MARS

TYPE

Product in market

USER

A Unity user who wants to make AR

CHALLENGES

Disparate sensor data

Reality is unpredictable

Build times are too long

Designing for unknowns is extraordinarily difficult

PROJECT LENGTH

Ongoing

THE PROCESS

In 2017, we decided we needed to turn our focus to tooling for augmented reality, on both phones and HMDs. Although we knew that AR and VR would eventually share most sensor+ML tech, it was clear that augmented reality would be paving the way for novel cameras, on-device computer vision and object awareness, semantic understanding, depth information, and a whole host of other info we bucket under the category 'world data'. It also includes creative challenges far beyond the relatively predictable world of virtual reality.

We began our research in a few different ways. We visited companies working on headsets to understand their current pain points, then went back and started to play around with what potential solutions might look like in the Editor.

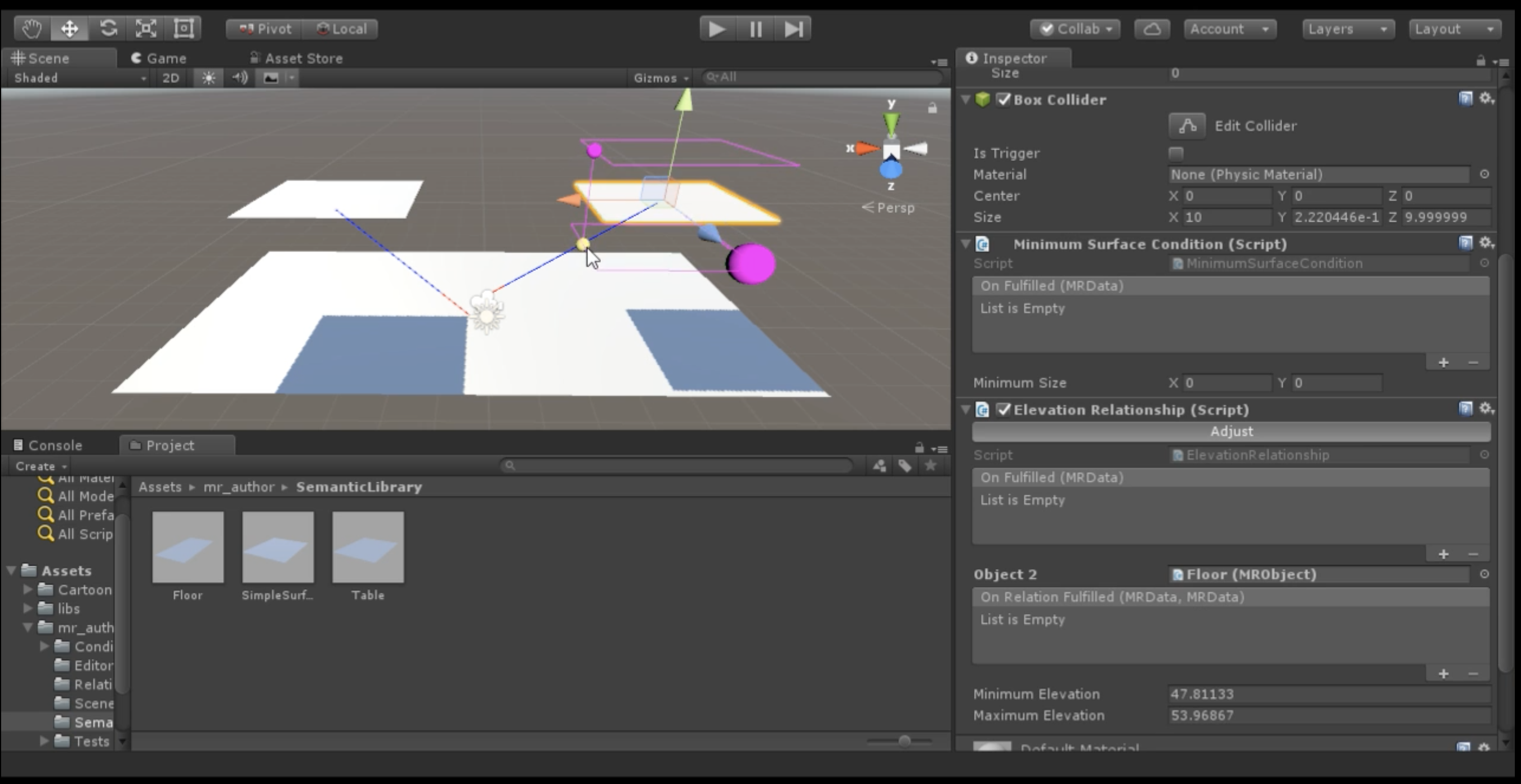

Left: early prototype of elevation and distance conditions in the Editor used to bring the hot dog demo to life.

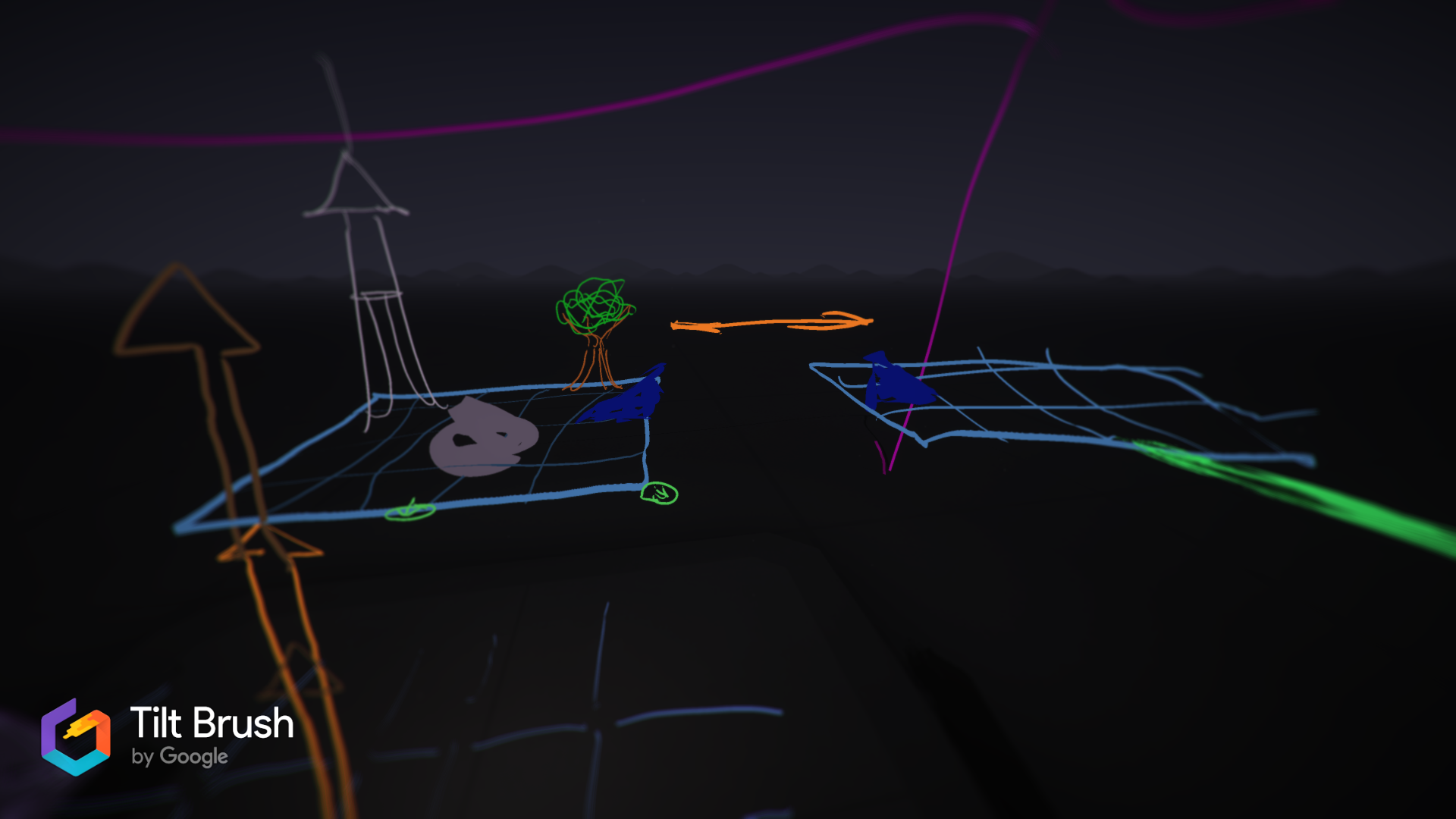

Above: Mapping out an example AR demo in Tilt Brush, where a digital hot dog truck jumps between two real desks at the office.

We also started talking to companies interested in AR, or actively working in the space. All told, we ended up talking to over sixty companies during the first year of development to gather requirements. They included AR hardware manufacturers, industrial companies, media and entertainment makers, studios and agencies hired to make AR content, and companies building out their own world data features: meshing, object recognition, hand, body and face tracking, customized hardware, and many more.

From this, we came up with a key set of problems that led to the requirements for the MVP.

THE RESULTS

Problems

- Developers can't predict real-world events, user behavior, or location variations well enough for their apps to run consistently.

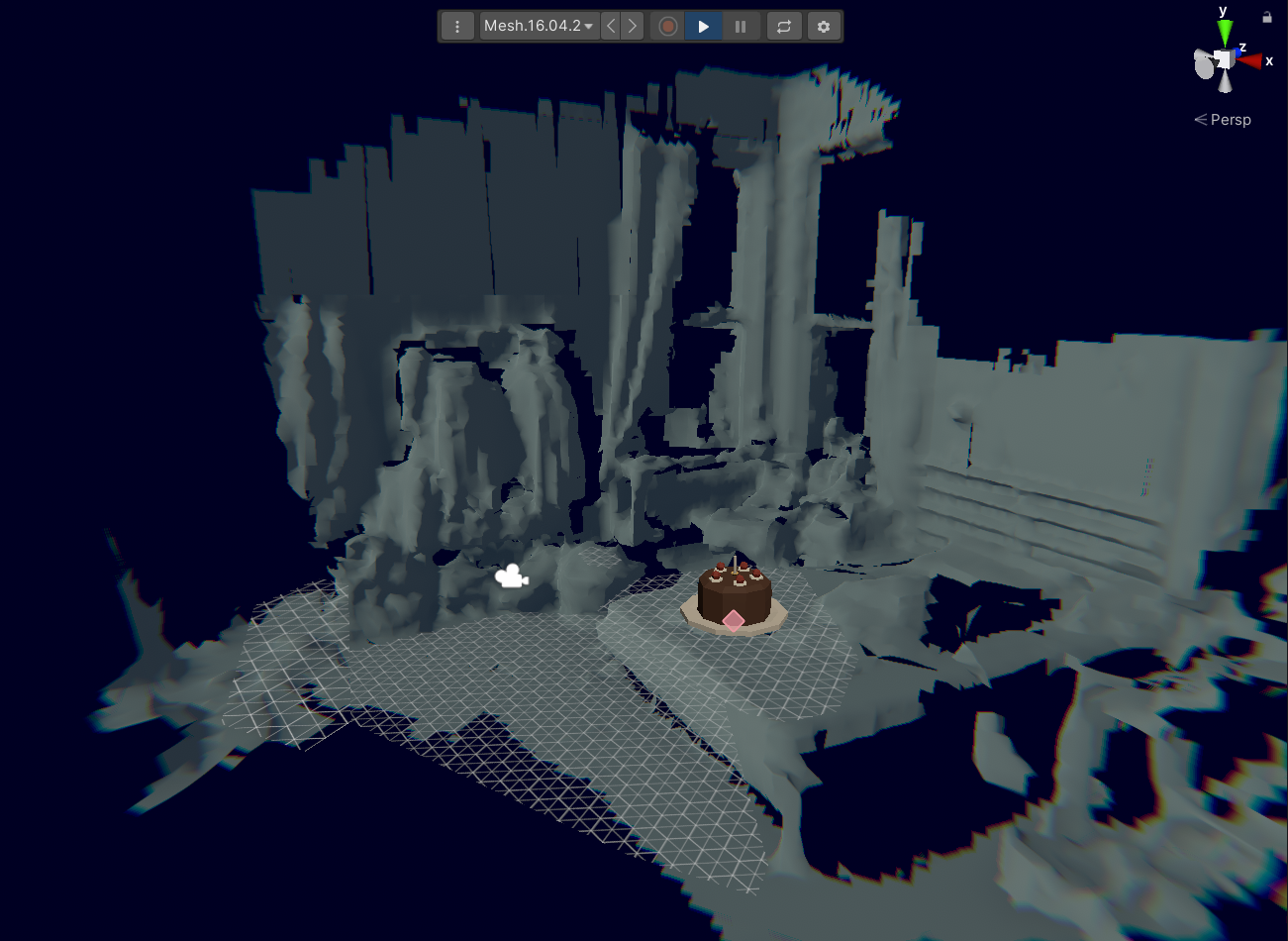

- We have imperfect information about the space, no matter how thoroughly it's scanned.

- The real world is constantly changing.

- Building and testing are difficult: developers need to be able to edit and test against various locations and different environments in the Editor itself.

- It's hard to design for the incredible number of variables in the real world.

- Users need to be able to make AR at the location, in the space, or in AR itself.

Requirements

- Create a system that works both in the Editor and in built apps, so that users' experiences can be as flexible and elegant as possible across unpredictable conditions.

- Give users the ability to create flexible layout systems and fallbacks to account for variations in shape and size.

- Continually update world information and adjust the app sensibly.

- Allow for simulated, recorded, and real-time world data to run directly in the Editor for users to develop against.

- Create an easy workflow that gets users to good results quickly when making complicated AR apps.

- Create companion creation apps that run on AR devices.

Features

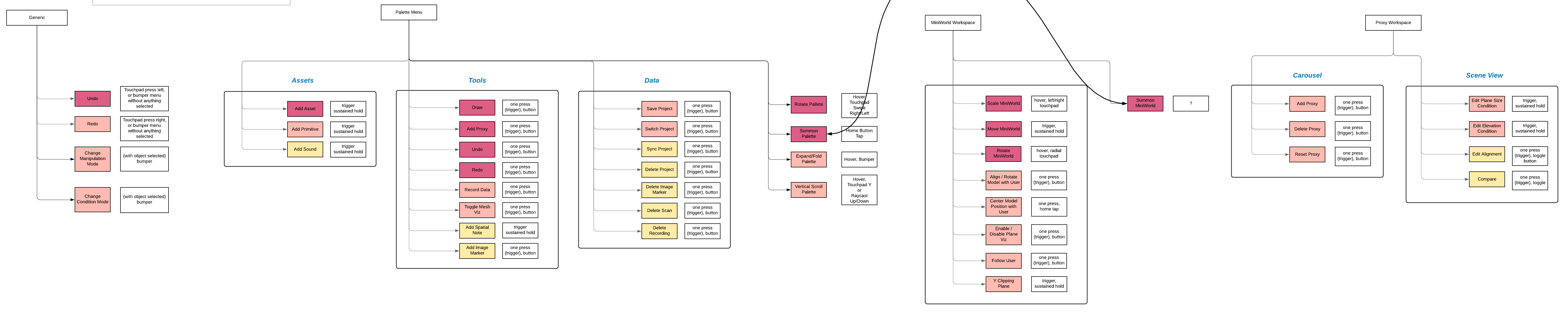

- The Proxy & Conditions system allows users to define parameters of flexible behaviors & events against real-world conditions.

- Landmarks and Forces allow devs to dictate where objects should go, based on the real environment.

- The Solver takes in all available information and continually updates the app's behavior based on the conditions, landmarks, and forces.

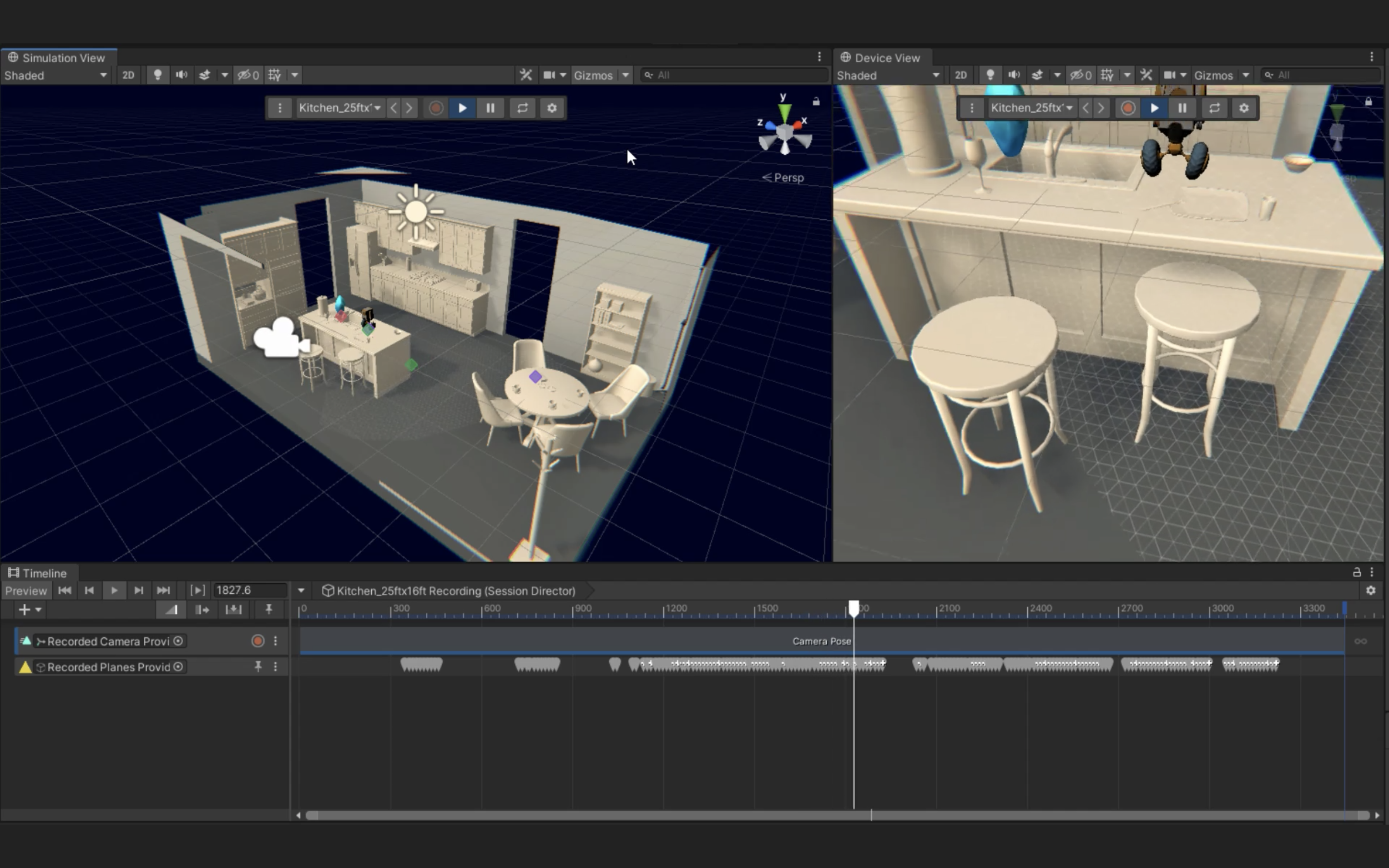

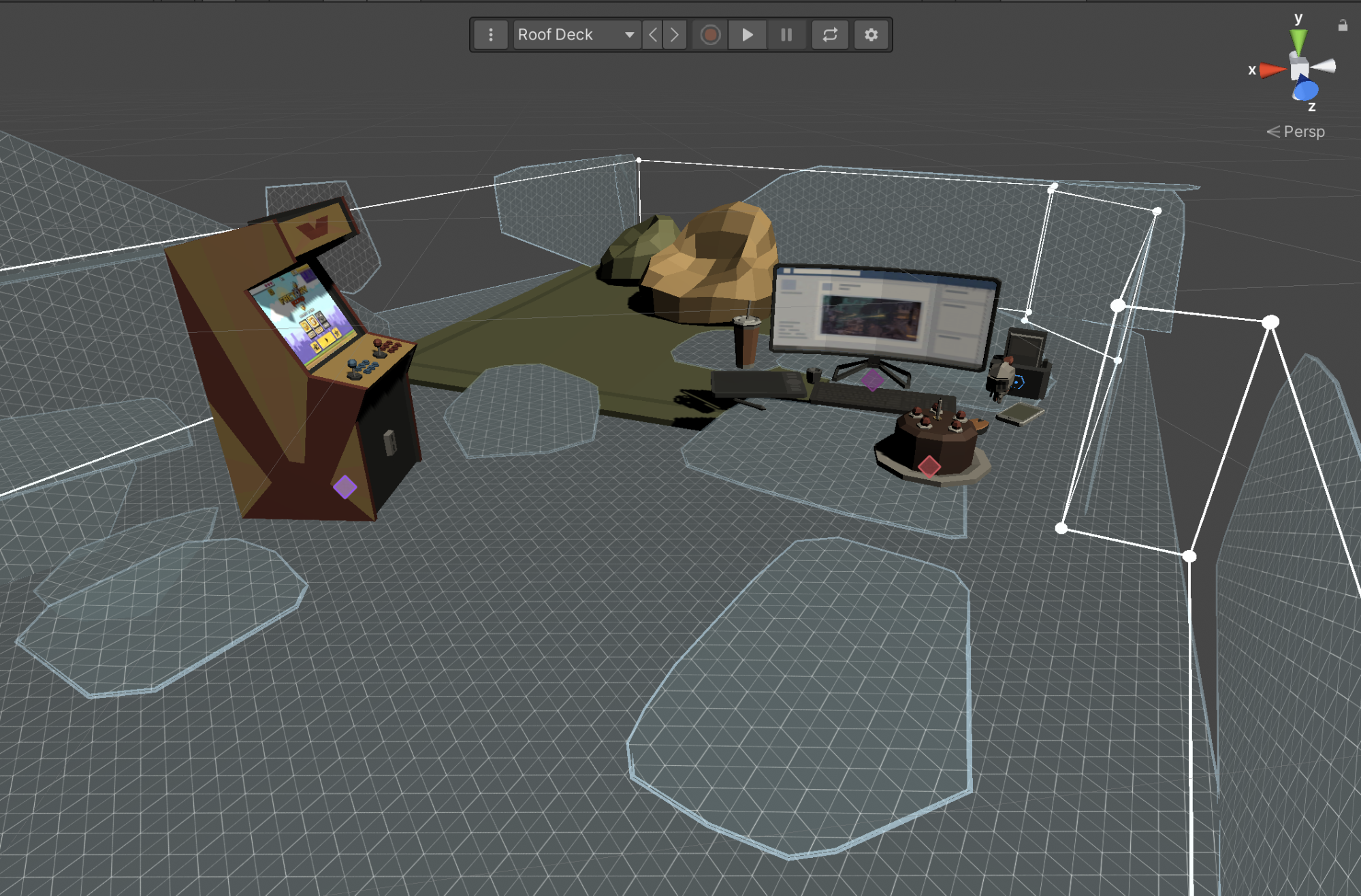

- The Simulation View is a new view in Unity that simulates reality, allowing users to test their app against a simulation, recording, or scan of real-world spaces and objects.

- The Rules workflow lets you design using a plain-language UI, right in the Editor.

- The Companion Apps allow anyone to use an AR device to lay out their app, record video, map out a space, or record AR information to sync back to their Unity MARS project.
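To make the Proxy & Conditions idea more concrete, here is a minimal Python sketch of how conditions might be matched against detected surfaces. It is an illustration only, not the Unity MARS API: `Plane`, `Proxy`, `min_size`, `elevation_between`, and the greedy `solve` function are all hypothetical names, and a real solver would re-run as world data changes and weigh landmarks and forces as well.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass(frozen=True)
class Plane:
    """A detected surface from world data (hypothetical shape, not a MARS type)."""
    name: str
    width: float      # metres
    depth: float      # metres
    elevation: float  # metres above the floor


# A condition is any predicate over a detected plane.
Condition = Callable[[Plane], bool]


def min_size(width: float, depth: float) -> Condition:
    """Condition: the plane is at least this large."""
    return lambda p: p.width >= width and p.depth >= depth


def elevation_between(low: float, high: float) -> Condition:
    """Condition: the plane sits within an elevation range."""
    return lambda p: low <= p.elevation <= high


@dataclass
class Proxy:
    """A stand-in for a real-world object, described only by its conditions."""
    name: str
    conditions: list[Condition]

    def matches(self, plane: Plane) -> bool:
        return all(condition(plane) for condition in self.conditions)


def solve(proxies: list[Proxy], planes: list[Plane]) -> dict[str, Optional[str]]:
    """Greedy assignment: each proxy takes the first unused plane that meets all its conditions."""
    used: set[str] = set()
    result: dict[str, Optional[str]] = {}
    for proxy in proxies:
        match = next((p for p in planes if p.name not in used and proxy.matches(p)), None)
        if match is not None:
            used.add(match.name)
        result[proxy.name] = match.name if match is not None else None
    return result


# Two desk-height tables and a shelf that does not exist in this room.
table_conditions = [min_size(0.8, 0.5), elevation_between(0.5, 1.0)]
proxies = [
    Proxy("table_1", table_conditions),
    Proxy("table_2", table_conditions),
    Proxy("shelf", [elevation_between(1.5, 2.0)]),
]
planes = [
    Plane("floor", 4.0, 4.0, 0.0),
    Plane("desk_a", 1.2, 0.6, 0.75),
    Plane("desk_b", 1.0, 0.6, 0.74),
]
assignment = solve(proxies, planes)
```

In this sketch the unmatched `shelf` proxy maps to `None`, which is the point at which an app would use a fallback layout.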

FEATURES IN ACTION

Here's what Rules looks like, running in the Editor, alongside the Simulation View. (Read more in the blog post here.) Rules allows you to author the semantic concepts of conditions and proxies: "On every [real world object], do x." It's important to note that this system is data-agnostic: it can work in many types of situations, some not conventionally bucketed under AR. For example, "When you hear a wake word, turn on the smart speaker," "When you see a face, unlock the door," or "When you see a stop sign, slow down the car."
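The data-agnostic shape of "On every [real world object], do x" can be sketched in a few lines of Python. This is only an illustration of the pattern, not how Rules is implemented in Unity MARS: the `RuleSet` class, its `on_every` and `observe` methods, and the trait names are assumptions made up for this example.

```python
from collections import defaultdict
from typing import Callable


class RuleSet:
    """Maps a semantic trait (any string) to the actions that should run when it is observed."""

    def __init__(self) -> None:
        self._rules: dict[str, list[Callable[[dict], str]]] = defaultdict(list)

    def on_every(self, trait: str, action: Callable[[dict], str]) -> None:
        """Register an action for every observation tagged with this trait."""
        self._rules[trait].append(action)

    def observe(self, trait: str, data: dict) -> list[str]:
        """Run all actions registered for the trait and return what they produced."""
        return [action(data) for action in self._rules.get(trait, [])]


rules = RuleSet()
rules.on_every("wake_word", lambda data: "turn on the smart speaker")
rules.on_every("face", lambda data: "unlock the door")
rules.on_every("stop_sign", lambda data: f"slow down to {data['target_speed']} km/h")

face_actions = rules.observe("face", {})
sign_actions = rules.observe("stop_sign", {"target_speed": 0})
unknown_actions = rules.observe("cat", {})
```

Nothing in the rule set knows whether the trait came from a camera, a microphone, or a simulated recording, which is the property the examples above rely on.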

Below, you can see an example of Forces in motion, defining the space between the UI so that it always elegantly repositions itself, taking both the environment & other UI into account. (Forum post here.)

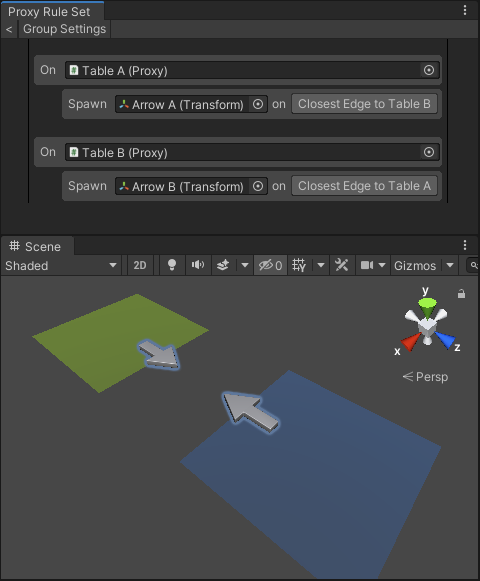
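As a rough intuition for how spacing forces can keep UI elements apart, here is a small Python sketch of a pairwise relaxation step. It is a stand-in technique chosen for illustration, not the Forces implementation in Unity MARS: the `relax` function and its parameters are hypothetical, and it ignores the environment entirely, modelling only the "other UI" part.

```python
import math


def relax(points, min_gap, iterations=50):
    """Push 2D points apart until close pairs are roughly min_gap apart."""
    pts = [list(p) for p in points]
    for _ in range(iterations):
        for i in range(len(pts)):
            for j in range(i + 1, len(pts)):
                dx = pts[j][0] - pts[i][0]
                dy = pts[j][1] - pts[i][1]
                dist = math.hypot(dx, dy)
                if dist >= min_gap:
                    continue  # already far enough apart
                # Unit direction from i to j; pick an arbitrary axis if they coincide.
                ux, uy = (dx / dist, dy / dist) if dist > 0 else (1.0, 0.0)
                # Each point moves half of the missing distance, in opposite directions.
                push = (min_gap - dist) / 2.0
                pts[i][0] -= ux * push
                pts[i][1] -= uy * push
                pts[j][0] += ux * push
                pts[j][1] += uy * push
    return [tuple(p) for p in pts]


# Two labels that start too close together, and one that is already clear of both.
labels = relax([(0.0, 0.0), (0.1, 0.0), (5.0, 5.0)], min_gap=0.3)
gap = math.hypot(labels[1][0] - labels[0][0], labels[1][1] - labels[0][1])
```

With many crowded elements a loop like this may need more iterations, or may not settle at all, which is one reason a production system would combine forces with conditions and landmarks rather than rely on them alone.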

Here's an example of Rules + Landmarks. The user is defining how a bridge should be set up between two tables—planes of a conditional size—once the match is found.

The Simulation View does more than just pretend to be the real world. You can also simulate the user moving around and the real-world understanding the device would find, then record and play back the movement on the timeline. (Blog post here.)

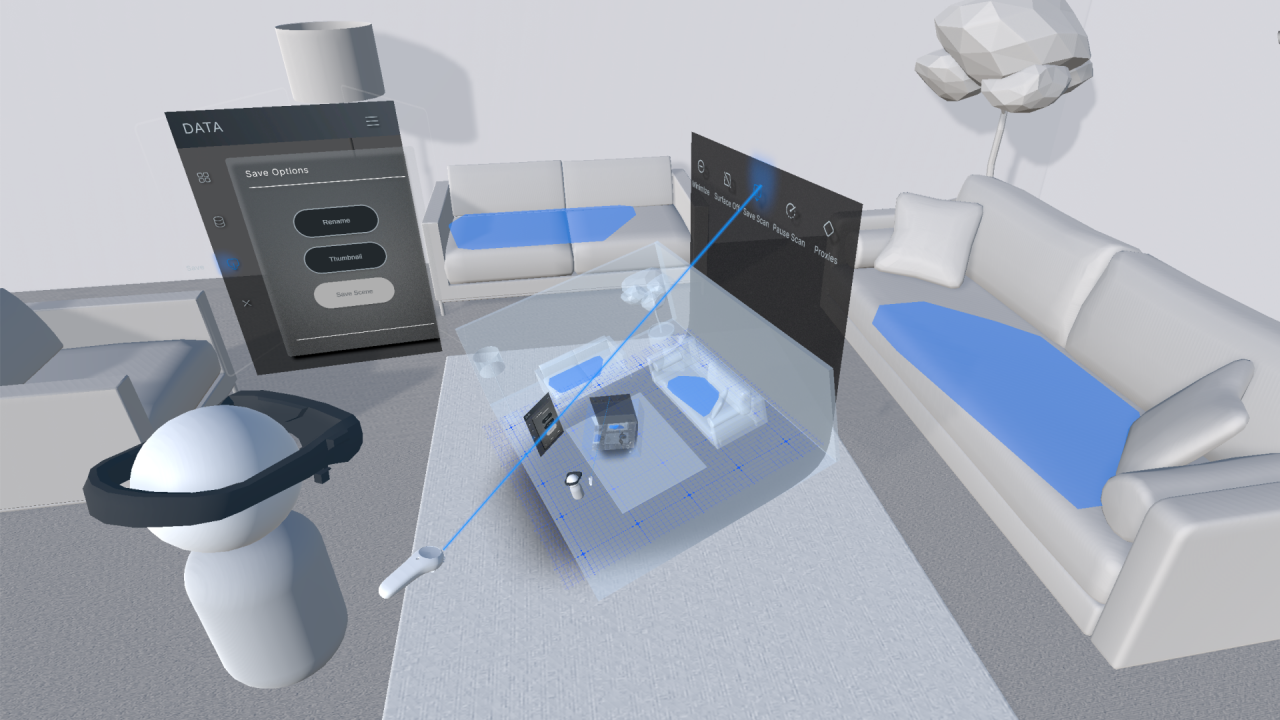

THE COMPANION APPS

There are many computer tasks that are blissfully, delightfully easy to do with a conventional display, mouse and keyboard: navigating constrained 2D UI, typing really, really fast, and zooming a cursor around via a detached peripheral input, making full use of Fitts's law for pixel-level precision using our forearms and wrists.

However, we make tools for apps that react to the world. That means we need creation tools for spatially aware devices. This is where the companion apps come into play.

We take the approach that phones and HMDs are different mediums of their own, and each has its strengths.

- Phones are effectively a display + controller packed into one: what you lose in occlusion by fingers tapping on the display, you gain back with the phone being a real-time feedback loop for recording video, AR data, and relative movement, as well as gyro and velocity information.

- HMDs are different. The display and the controller are decoupled, and so there is more room to reason about a space without having it be the direct point of interaction.

Both have the advantage of in-situ authoring, which is just a fancy way of talking about information in context.

ARKit scan in simulation view

Magic Leap scan in simulation view

The HMD versions of the companion apps have significant design challenges beyond those of even conventional professional XR development tools, because in the end, the experience they make must be finished in Unity to be built & published to an app store.

My ask for the design team: "We need to make something useful enough that people will put on the headset every day to use it." This involved the creation of a new, AR HMD-focused design language, based on 'light as a material', and a significant amount of research and prototyping. You can read the spatial design team's blog post about their process here.

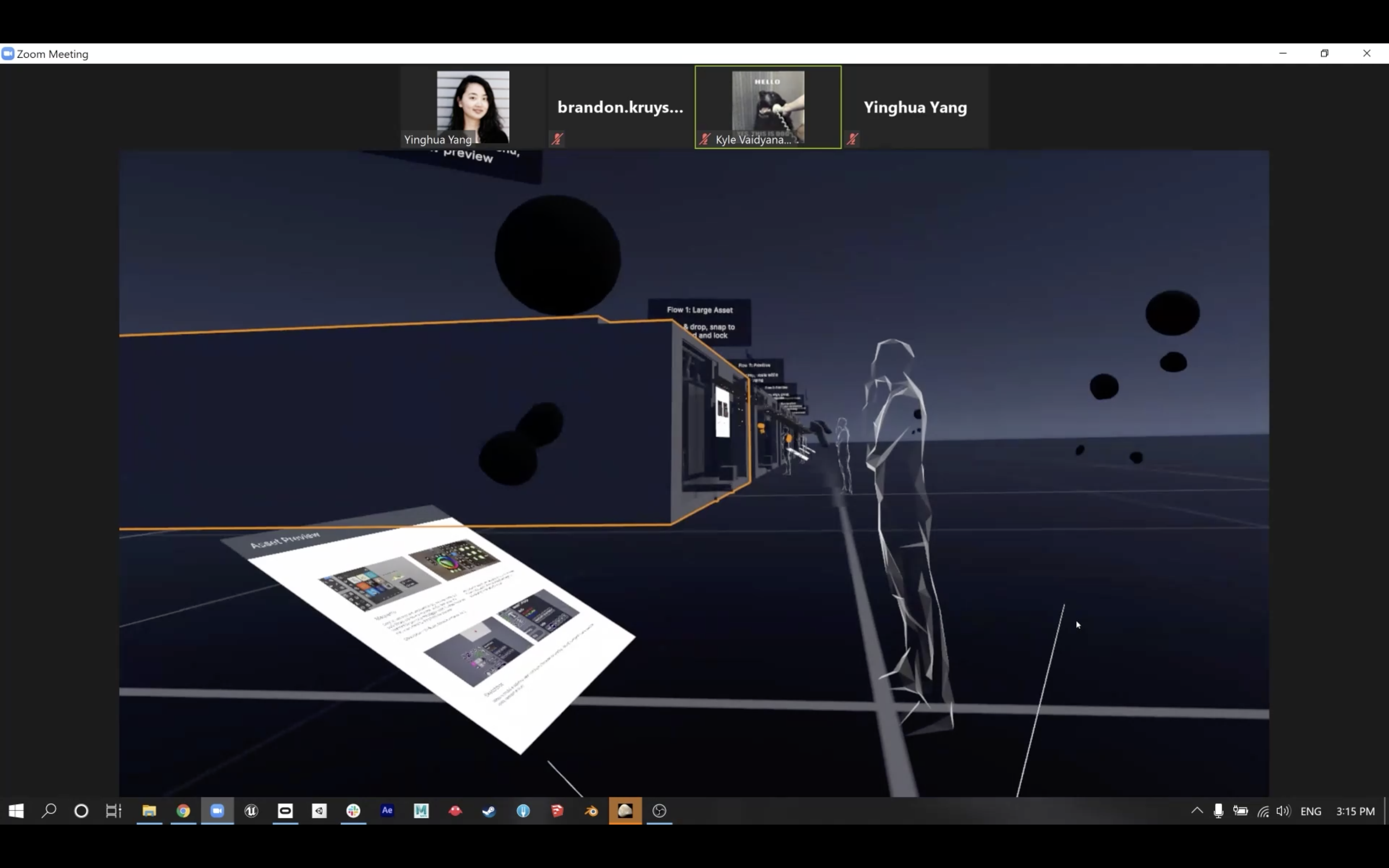

One extremely cool aspect of creating XR in XR is that we can re-use old tools. Here, we're doing a spatial sync in VR with a build that uses EXR as the base, with the new UI on top, and Normcore for networking.

The HMD apps contain the best subset of features that we identified for the mobile app. The core, of course, is recording back the data we get from the devices, and allowing our users to create directly against it. AR HMDs provide an advantage with direct authoring on the device, with all the limitations at hand. The disadvantage, of course, is that you're fighting for screen space between the app you're making and the UI needed to make it. Using hand tracking alone, as the sole input, is also remarkably challenging for authoring in space.

VR provides a nice balance in that it allows you to design for both VR and AR at the same time. We've worked with Tvori to make an AR HMD emulation shader to give us a sense of what the UI will look like in the limited FOV. The biggest disadvantage is simply the disparate controller schema; what you can see is only one part of an app, and often you need to test the right controllers with the right device to really understand if you're making the right design decision.

Speaking of inputs and visuals: context is important, especially for new users. Here, we have two videos for comparison: the first, on the left or top, is an example of initial-stage tooltips for an AR controller. This is based on work shown in the second video, one of the initial EditorXR tests for tooltip placement, with a variety of controller and HMD angles, with all tooltips active, using a different set of controllers.

The MARS companion apps are still in flight, so this is a sneak peek into what we're building. We look forward to unlocking a new way for anyone to author for interactive spatial computing, using contextually aware devices, in the space. The future is here, even if the nomenclature is still confusing.