PROTOTYPES & EXPERIMENTS

Facial AR Remote

Quick Summary

NAME

Facial AR Remote

TYPE

Prototype

USER

Indie devs, animators

CHALLENGES

None, really; as long as you have the art, it's straightforward

PROJECT LENGTH

One month

For our proof-of-concept demo, we used the main character from Yibing Jiang's Windup Girl short.

AnimateVR

Quick Summary

NAME

AnimateVR

TYPE

Prototype

USER

Anyone with VR

CHALLENGES

Mapping familiar paradigms, like the timeline, to VR's large hit targets

Easing and feel of motion trails

Button states

PROJECT LENGTH

Six weeks

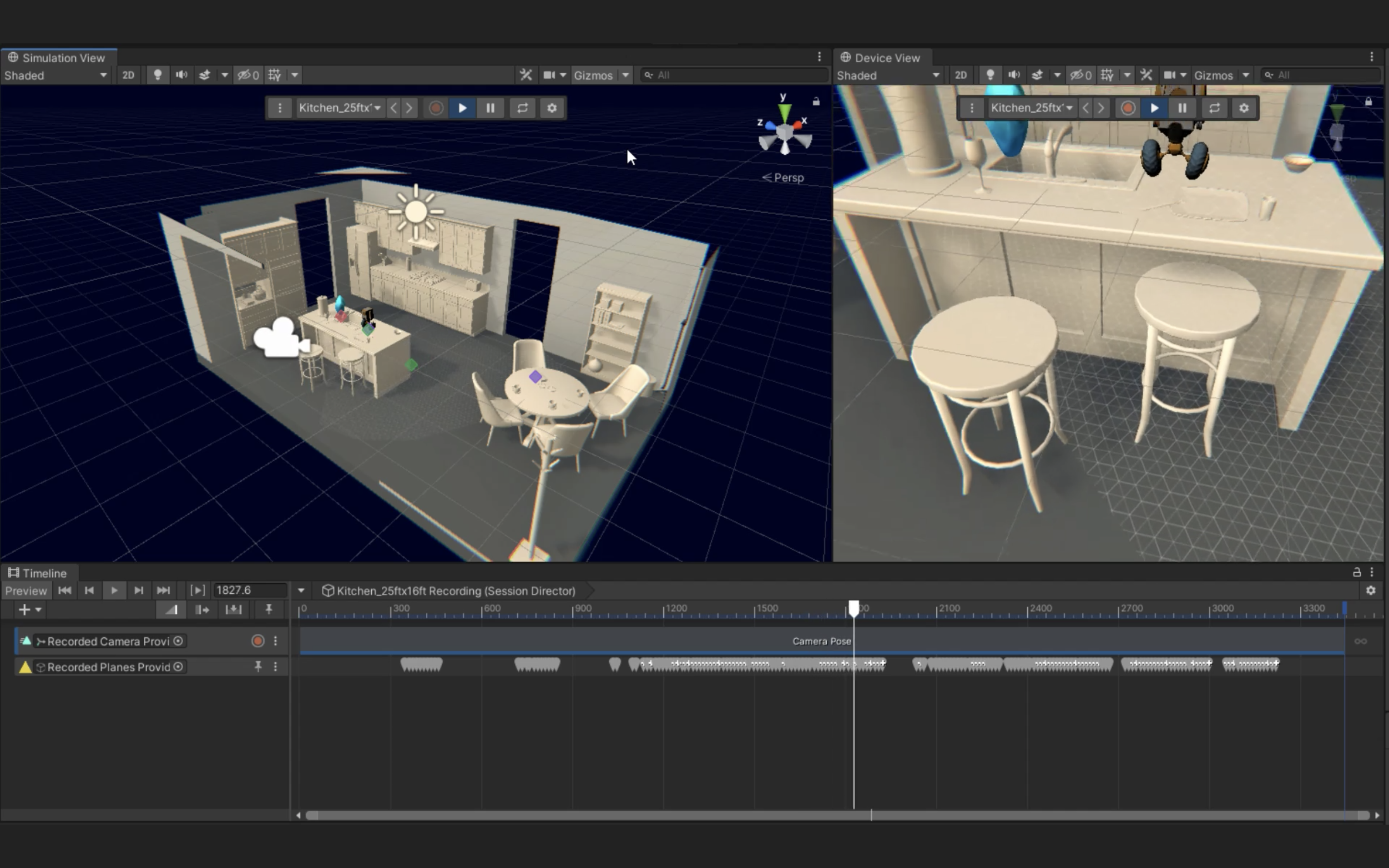

AnimateVR was our experiment in making animation work easily in virtual reality, using a simple timeline, keyframes, and visible, editable motion trails. You really haven't been humbled by XR design until you've recorded a minute-long animation and realized that your responsive timeline UI is now twenty meters long. Here's a very rough initial version; notice the jagged motion trails.

We learned a lot from this project: that too many motion trails make it impossible to reason through a scene; what type of easing we needed to make moving motion trails feel forgiving and smooth; that adding grab handle points to the trails, which appear and magnify as the user's controller gets closer, makes grasping easier; and that we needed to clearly identify a multitude of XR-specific object states, which we codified in the Object States Grid. You can read more in the blog post here. I highly recommend it, as it covers many key learnings, from adding fake physics to separate button mappings for different object types.
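To make the grab-handle idea concrete, here is a minimal sketch of handles that stay hidden until the controller approaches, then grow with an eased (smoothstep) curve instead of popping in. The function names, the 5 cm and 30 cm thresholds, and the 1.5x maximum scale are all hypothetical values chosen for illustration, not the numbers AnimateVR used.

```python
import math
from typing import Sequence, Tuple

Vec3 = Tuple[float, float, float]


def smoothstep(edge0: float, edge1: float, x: float) -> float:
    """Cubic ease from 0 (at edge0) to 1 (at edge1), clamped outside."""
    t = max(0.0, min(1.0, (x - edge0) / (edge1 - edge0)))
    return t * t * (3.0 - 2.0 * t)


def handle_scale(distance: float, near: float = 0.05, far: float = 0.30,
                 min_scale: float = 0.0, max_scale: float = 1.5) -> float:
    """Scale of one trail handle given controller distance in meters.

    Hypothetical tuning: fully hidden beyond `far`, fully magnified
    inside `near`, eased in between so growth feels smooth.
    """
    closeness = 1.0 - smoothstep(near, far, distance)
    return min_scale + (max_scale - min_scale) * closeness


def handle_scales(trail_points: Sequence[Vec3], controller_pos: Vec3,
                  **kwargs: float) -> list:
    """Per-point handle scales for a whole motion trail."""
    return [handle_scale(math.dist(p, controller_pos), **kwargs)
            for p in trail_points]
```

Because the scale depends only on distance, handles near the controller enlarge while the rest of the trail stays uncluttered, which lines up with the observation that too many visible trail elements make a scene hard to read.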

Even our simple prototype quickly grew in scope; we thought keyframes were a nice-to-have, but they turned out to be essential. Also, notice how much smoother the motion trails are here.
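As a rough illustration of how keyframes and a motion trail relate, the sketch below evaluates a position track at any time by easing between neighboring keyframes, then samples that track to produce trail points. The data layout, the per-segment ease-in-out, and the 30 samples-per-second rate are assumptions for the example; the source doesn't describe AnimateVR's actual interpolation.

```python
import bisect
from typing import List, Tuple

Vec3 = Tuple[float, float, float]
Keyframe = Tuple[float, Vec3]  # (time in seconds, position); hypothetical layout


def ease_in_out(t: float) -> float:
    """Cubic ease-in-out on [0, 1]."""
    return t * t * (3.0 - 2.0 * t)


def evaluate(keyframes: List[Keyframe], time: float) -> Vec3:
    """Position at `time`, given keyframes sorted by time."""
    times = [k[0] for k in keyframes]
    if time <= times[0]:
        return keyframes[0][1]
    if time >= times[-1]:
        return keyframes[-1][1]
    # Index of the first keyframe strictly after `time`.
    i = bisect.bisect_right(times, time)
    (t0, p0), (t1, p1) = keyframes[i - 1], keyframes[i]
    u = ease_in_out((time - t0) / (t1 - t0))
    return tuple(a + (b - a) * u for a, b in zip(p0, p1))


def sample_trail(keyframes: List[Keyframe],
                 samples_per_second: int = 30) -> List[Vec3]:
    """Evenly spaced points along the animation, for drawing a trail."""
    start, end = keyframes[0][0], keyframes[-1][0]
    n = max(1, int(round((end - start) * samples_per_second)))
    return [evaluate(keyframes, start + (end - start) * i / n)
            for i in range(n + 1)]
```

Easing each segment independently makes motion settle at every keyframe; a spline through the keys (e.g., Catmull-Rom) would keep motion continuous, at the cost of slightly less predictable handles.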

Buttons like 'record' are unusual in that they indicate both the affordance and the mode state (e.g., currently recording). We spent a surprisingly long time making the record button feel good and make sense.
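One way to keep those two meanings apart is to derive every visual from the mode explicitly. The hypothetical sketch below does that for a record toggle: the icon communicates the affordance (what the next press will do), a separate indicator communicates the mode (recording is live), and hover is tracked independently. The class, field names, and icon choices are illustrative assumptions, not AnimateVR's implementation.

```python
from enum import Enum, auto


class Mode(Enum):
    IDLE = auto()
    RECORDING = auto()


class RecordButton:
    """Hypothetical toggle whose visuals encode affordance and mode separately."""

    def __init__(self) -> None:
        self.mode = Mode.IDLE

    def press(self) -> Mode:
        # A single control toggles between the two modes.
        self.mode = Mode.IDLE if self.mode is Mode.RECORDING else Mode.RECORDING
        return self.mode

    def visual(self, hovered: bool = False) -> dict:
        recording = self.mode is Mode.RECORDING
        return {
            # Affordance: the icon shows what the next press will do.
            "icon": "stop" if recording else "record",
            # Mode state: a persistent indicator that recording is live.
            "indicator_lit": recording,
            # Interaction state: highlight while the controller hovers.
            "highlighted": hovered,
        }
```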

We ended up re-using patterns from EditorXR, like being able to 'call' the UI to you.

ARView

Quick Summary

NAME

ARView

TYPE

Prototype

USER

Mobile phone users

CHALLENGES

Networking previously non-networked apps

Aligning world space and digital content

PROJECT LENGTH

Four weeks

I discuss the value of mobile AR as both a display and a controller in the MARS companion apps section, but one aspect I find particularly exciting is how the phone can be used as a window into other digital worlds, for example, someone else's virtual experience. A phone can be more useful than a static, mirrored display of a VR app, because the user can move the camera around by moving the phone, much like they move the camera with their head-mounted display (HMD) in VR.

We made ARView as a proof of concept. Adding this capability to your app requires networking, and 1:1 relocalization of digital objects also requires alignment, either manual or anchor-based. For ARView, we used manual alignment: we placed the controllers on a table, then tapped them with the phone. It's simple and effective, though it doesn't account for drift.
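The tap-to-align step can be sketched as a small solve. Assuming both the phone's AR session and the VR tracking space are gravity-aligned (y up) and measured in meters, the only unknowns are a rotation about the vertical axis and a translation, and two tapped points are enough to recover both. The function names and this two-point approach are illustrative assumptions, not ARView's actual code; like the original method, it is a one-shot calibration and does nothing about later drift.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]


def add(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] + b[0], a[1] + b[1], a[2] + b[2])


def sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def scale(a: Vec3, s: float) -> Vec3:
    return (a[0] * s, a[1] * s, a[2] * s)


def yaw_rotate(p: Vec3, yaw: float) -> Vec3:
    """Rotate p about the vertical (y) axis by `yaw` radians."""
    c, s = math.cos(yaw), math.sin(yaw)
    return (c * p[0] + s * p[2], p[1], -s * p[0] + c * p[2])


def heading(v: Vec3) -> float:
    """Horizontal angle of v; yaw_rotate(v, t) increases it by t."""
    return math.atan2(v[0], v[2])


def solve_alignment(ar_a: Vec3, ar_b: Vec3, vr_a: Vec3, vr_b: Vec3):
    """Yaw and translation taking phone (AR) coordinates into VR coordinates.

    ar_a/ar_b: phone positions at each tap; vr_a/vr_b: the tracked
    controller positions. The controllers must be horizontally apart.
    """
    yaw = heading(sub(vr_b, vr_a)) - heading(sub(ar_b, ar_a))
    # Anchor the translation at the midpoints to split tap error evenly.
    mid_ar = scale(add(ar_a, ar_b), 0.5)
    mid_vr = scale(add(vr_a, vr_b), 0.5)
    translation = sub(mid_vr, yaw_rotate(mid_ar, yaw))
    return yaw, translation


def ar_to_vr(p: Vec3, yaw: float, translation: Vec3) -> Vec3:
    """Map a phone-space point (e.g., the phone camera) into VR space."""
    return add(yaw_rotate(p, yaw), translation)
```

Once solved, applying `ar_to_vr` to the phone's camera pose each frame would place a spectator camera in the VR scene, which is the "phone as a window" behavior described above.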

Still, this prototype helps extend the vision that future apps will be built once and run anywhere. My hope is that most XR apps in the future have this multi-device-view capability by default.