Mental Models & Systems for Spatial Computing

This is an aggregate essay based on talks I gave in 2018-2019, effectively laying out the value of ambient computing within the larger context of computers as a tool for human augmentation.

TABLE OF CONTENTS

- What’s spatial computing

- My ideal morning

- Why focus on ambient computing?

- Computers are great

- Human-ing computers

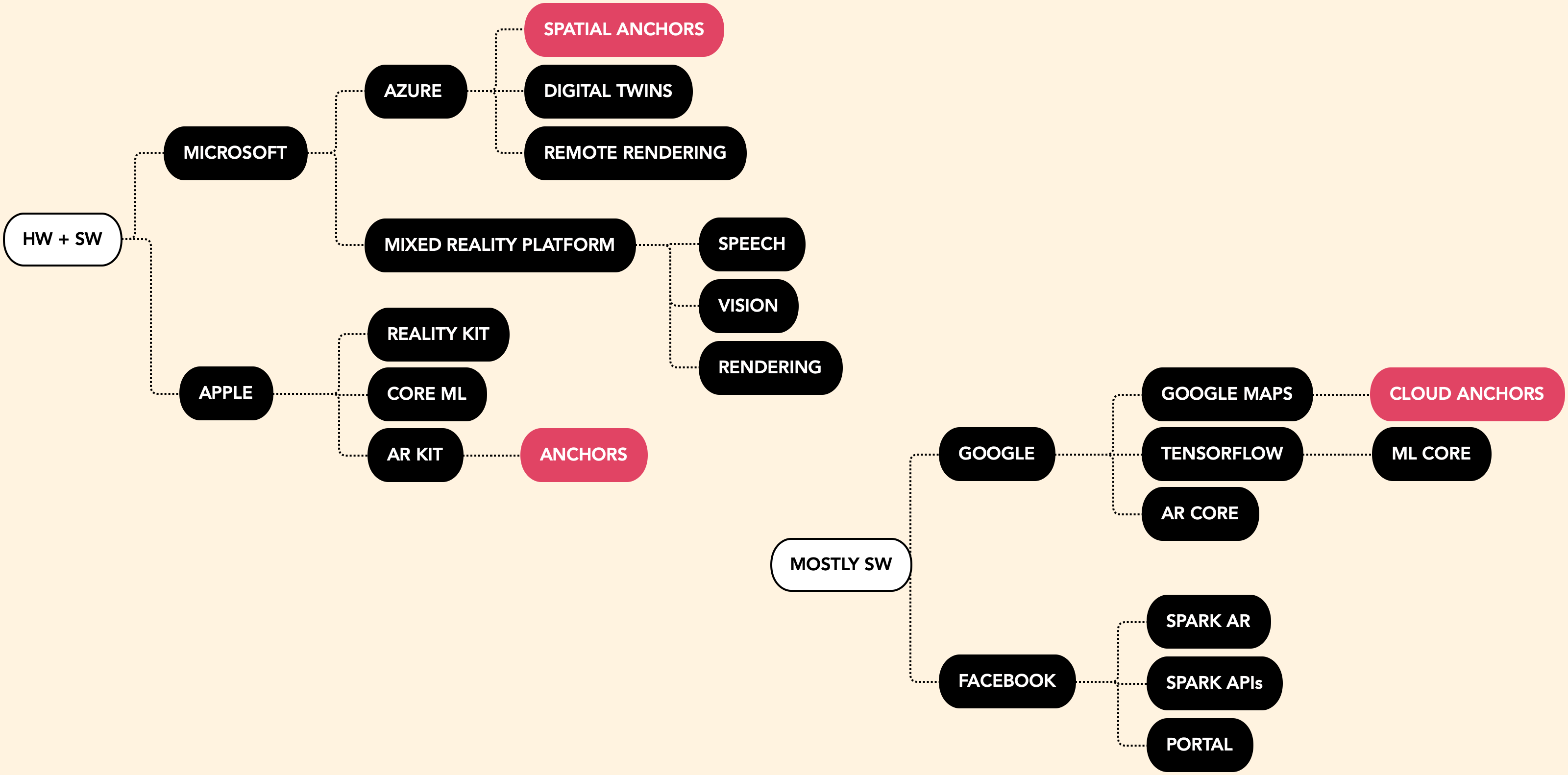

- How humans communicate with computers

Hi, I’m Timoni West. My team, the XR Tools Group at Unity, has two goals. First: figure out what we need to build today to help our community make great XR, with both conventional and spatial tools. Second: think about what the future of computers will be, and what Unity needs to do to support that future.

We'll be going over the topics listed in the table of contents above. But the overall theme is this: what we need to think about, and work with, in order to get to that future.

First things first, though: you found this piece and you're reading it, but maybe only to answer a single curious question:

PART ONE: What exactly is spatial computing?

Simon Greenwold defined it as 'human interaction with a machine in which the machine retains and manipulates referents to real objects and spaces.' Straightforward. But there are earlier ties to ambient intelligence: 'electronic environments that are sensitive and responsive to the presence of people.' Ubiquitous computing definitions also apply: 'computing is made to appear anytime and everywhere...can occur using any device, in any location, and in any format. A user interacts with the computer, which can exist in many different forms, including laptop computers, tablets and terminals in everyday objects such as a refrigerator or a pair of glasses.'

You can trace the concept to any number of terms: calm computing, ambient computing, those cool holograms from Iron Man, or mixed reality. I am agnostic on what you choose to call it and why, as long as we share the same goal: getting computers to be as integrated with our lives as we can. Here is a simple animated example. We'll get into this more later, but the core idea is that any app should work with any device you have at hand, or would want to use.

Animation by Bushra Mahmood.

Of course, in order to make apps that can run on any device, we need the tools to make those apps. Handling so many variables, capabilities, and input types goes far beyond even today's challenge of supporting different devices. Good tools are extremely important for helping developers understand the mental models required to get to something like this.

When people imagine the ideal way to interact with computers, most have a similar vision in their heads, inspired by movies and TV. So let's talk about what that looks like.

Here are a few subtle gestures from Black Mirror. They're nice: natural and comfortable.

But what looks good on camera is often nonsensical in real life. Imagine having to hold up your hand in this pose to take a phone call, or having to find someone nearby and press arms together to start a joint video call.

Nonetheless, this is the visual language that inspires what people think is possible, or should be possible, with technology.

In fact, these gestures are ingrained in our shared cultural literacy. We get the reference; we understand the underlying motivation, just as we do with any conventional real-world gesture. We have created a sign language to communicate with the technology in advance of its existence.

Of course, the tech has many implications far beyond the basic assumption that we can interact with digital objects through this quasi-telekinetic gestural language. Below is Keiichi Matsuda's Hyper-Reality, an exploration not only of a truly integrated digital world, but also of the social capital and economy that could tie into such a world.

It’s worth noting that the dystopian element in this video isn’t really the augmented reality itself, although that is overwhelming. The dystopian element is that computers are not only uncomfortable and poorly designed, but also hold the social and real capital for your entire life.

Although this is a good cautionary tale, it has little to do with augmented reality itself. If you ask the average person what they’d want to do with a mixed reality computer, most just want basic, cool things: we all want to play Minecraft in our house. And that’s actually what’s being built.

Here’s the thing, though. I looked for a while, and I couldn’t find a really good example of spatial computing anywhere: not in movies, not in books, not in concept videos. So, lacking the time to make a concept video, I decided to walk you through an average morning in my ideal world, one in which we have really nailed what I'll call "spatial computing." You can walk through it here. Or you can skip ahead to the systems, starting with How Humans Communicate with Computers.