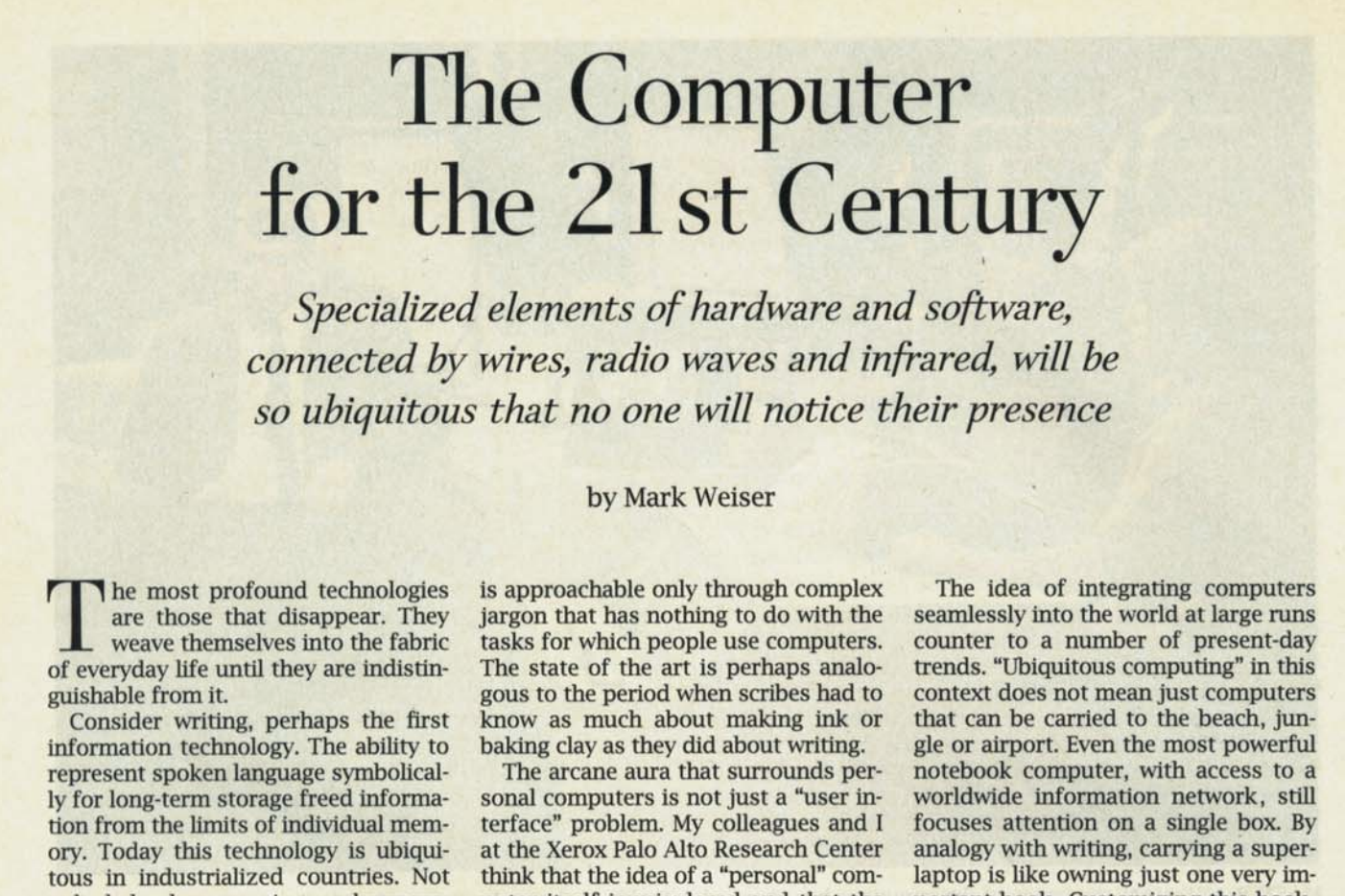

How Humans Communicate with Computers


We talk to our computers all the time—from a smile to a frown, a quiet 'thank you' or profanity, and any number of gestures. But in practice, there are only three official channels that are widely recognized by both humans and computers.

These are the three major categories of input and output in computing: visual, audio, and physical. Each input/output pairing is known as a modality, though the practical details differ between how computers take in input and how humans do. We'll go over this, as well as the pros and cons of each category.

VISUAL

Visual includes gestures, poses, graphics, text, UI, screens, and animations.

AUDITORY

Auditory includes music, tones, sound effects, wake words and natural language processing, and diegetic and real-world sounds.

PHYSICAL

Physical includes hardware, physical affordances like buttons and edges, haptics, and any time a user interacts with a real object.

Why do we care about these modalities? Because our users have different needs at different times. Needs can be situational, tied to their level of mastery, or simply a matter of personal preference. It is our responsibility to think this through for them.

TL;DR Cheat Sheet: Best Practices

VISUAL

- Best output for quickly transferring dense amounts of information

- Great output for tutorials and onboarding

- Okay input when the user has to input data and can't use voice or physical peripherals

- Poor input for fast, precise needs

- Poor input for flow states

AUDITORY

- Best I/O for ambient confirmation

- Best I/O when users are constrained visually & physically

- Great output for visceral reactions

- Okay I/O for situations where the user shouldn't, or can't, look at UI

- Poor I/O for quickly transferring dense amounts of information

PHYSICAL

- Best input for flow states

- Best for fast, precise inputs

- Best inputs for situations where mastery is ideal or essential

- Great inputs for situations where the user shouldn't, or can't, look at UI

- Poor outputs for any type of information transfer beyond the basics

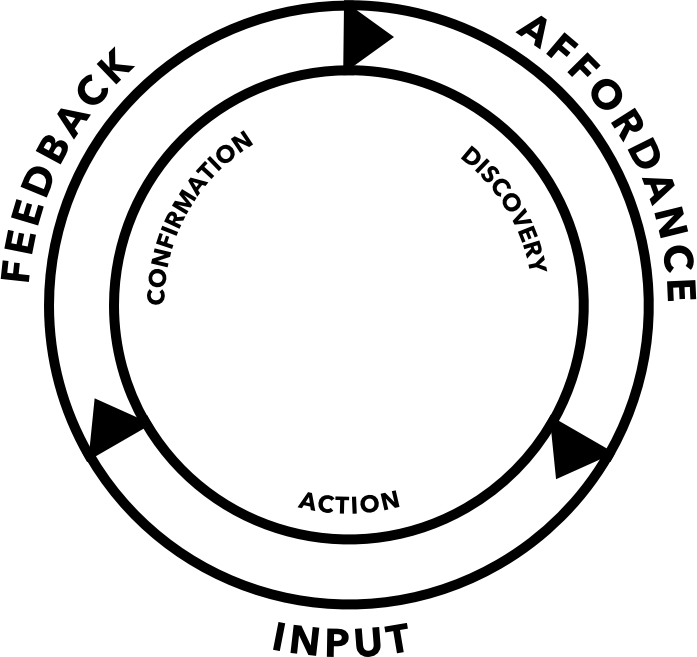

The Action Loop

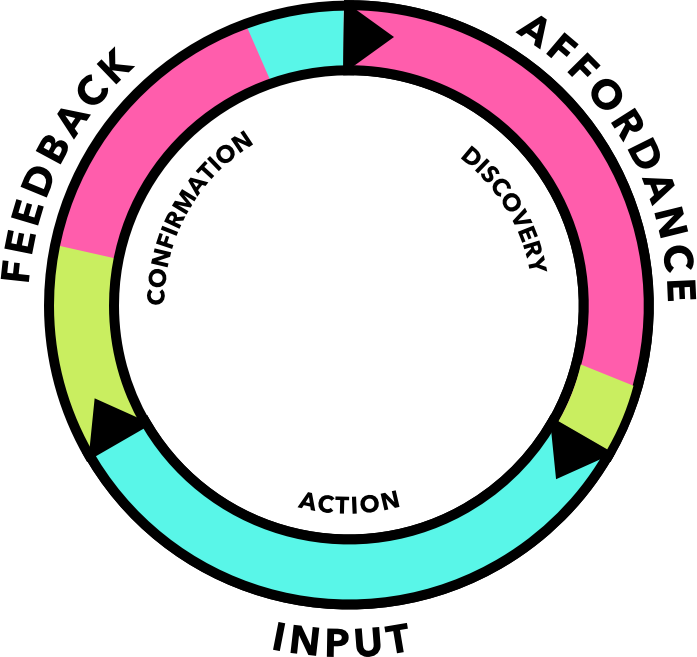

Interactions that 'feel' best are those that make use of all the modalities that are available. You can map out any action to this basic action loop. For every stage of the user journey in a feature, you should decide what modalities you want to use.

In the second wheel here, I've mapped a simple game controller action. The affordance to push the button is mostly visual (pink), with some sound cues (green). The input is simply the button press: all physical (blue). The feedback includes haptic feedback, sounds, and visual confirmation.
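To make the mapping concrete, here is a minimal TypeScript sketch that records, for each stage of the loop, which modalities a design uses, and flags the ones it leaves out. The type names, the helper functions, and the three-stage split (affordance, input, feedback) are hypothetical, drawn from the controller example above rather than from any published model; the `buttonPress` values simply transcribe that example.

```typescript
// Hypothetical model of the action loop: for each stage of an interaction,
// record which modalities the design uses.
type Modality = "visual" | "auditory" | "physical";
type Stage = "affordance" | "input" | "feedback";
type ActionLoop = Record<Stage, Modality[]>;

const MODALITIES: Modality[] = ["visual", "auditory", "physical"];
const STAGES: Stage[] = ["affordance", "input", "feedback"];

// The game controller button press described above:
// - affordance: mostly visual, with some sound cues
// - input: the button press itself, all physical
// - feedback: haptics (physical), sounds, and visual confirmation
const buttonPress: ActionLoop = {
  affordance: ["visual", "auditory"],
  input: ["physical"],
  feedback: ["physical", "auditory", "visual"],
};

// Modalities that appear in no stage of the loop at all.
function unusedModalities(loop: ActionLoop): Modality[] {
  const used: Modality[] = [...loop.affordance, ...loop.input, ...loop.feedback];
  return MODALITIES.filter((m) => used.indexOf(m) < 0);
}

// For each stage, the modalities that stage does not use.
function gapsByStage(loop: ActionLoop): Record<Stage, Modality[]> {
  const gaps = {} as Record<Stage, Modality[]>;
  for (const stage of STAGES) {
    gaps[stage] = MODALITIES.filter((m) => loop[stage].indexOf(m) < 0);
  }
  return gaps;
}

console.log(unusedModalities(buttonPress)); // []
// affordance: ["physical"], input: ["visual", "auditory"], feedback: []
console.log(gapsByStage(buttonPress));
```

The button press uses every modality somewhere in the loop, so `unusedModalities` returns an empty list. A visual-only loop would return `["auditory", "physical"]`, which, by the reasoning above, hints that the interaction may not feel as good as it could. Per-stage gaps are worth checking separately, because the right mix will shift with the user's familiarity, ability, and device.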

Keep in mind that this loop will look different depending on where the user is in their life cycle, how familiar they are with the experience, their ability level, or even which device they're using. Start thinking about all of your design decisions this way, and your app's user experience will vastly improve. Promise.

The two sections above are enough to give you the tools to make good decisions for your apps. For more resources, check out the Object States Grid, or the Input Mapping Guide. If you'd like a deeper dive into the pros and cons of each modality with examples, read on.

Visual Inputs & Outputs

Any phone app is a fine example of a visual output. Here are some things to note:

- Most apps are designed to be visual-only, because users often turn off sound and haptics.

- Physical affordances don't actually exist on glass displays: any perceived physical affordance, like a button, has no actual physicality to it.

- Phones rely almost entirely on visual output, and because the display is also the input device, the user's own hands constantly occlude it. As a result, designers lean heavily on animations to provide context, both about what's happening and to confirm success or errors. Animations are more forgiving because they play out over time, so the user may catch the beginning or end even if the visual was partially occluded at first.

Visuals have a lot of advantages, which is why they are the most popular output type by far for most abled users. Here's a rundown of advantages:

- Very high-density information potential: humans can read 250 to 300 words per minute.

- Extremely customizable; unlike physical objects, there's no limit to what you can design.

- Most words, symbols, and objects are easily recognizable & understandable to humans; unlike, say, a new controller, the training has already been done for you.

- Time-independent; visuals can just hang in space forever. This makes them an excellent ambient modality.

- Visuals are easy to rearrange or remap without losing user understanding.

- Visual inputs, like gesture and pose, don't require the user to hold a device.

Of course, there are disadvantages, too:

- Visuals can be easy to miss; they are often location-dependent. If the user is looking the other way, they will simply miss it.

- Visuals require the prefrontal cortex to process and react to complicated information, which adds cognitive load. It is cognitively easier to hear an 'error' tone than to read the word "Error".

- Relatedly, visuals are likely to 'interrupt' a user who is in a flow state. Having something unexpectedly appear in front of your face is more jarring than unexpectedly hearing a noise, simply because in real life, we can usually hear things coming.

- Occlusion and overlapping is the name of the game. By definition, visuals exist in some kind of space, and that means they are often in front or behind something else.

- As input for the computer via a camera feed, gestures and poses must be precise, repeated often, and performed within the camera's frustum. This can be tiring.

- Very precise visual tracking, like eye tracking, is processor intensive.

Auditory Inputs & Outputs

Auditory-first inputs and outputs have only recently become common in conventional computing. Surgery rooms are a great real-life example: because the surgeon's hands and head must remain focused, the room communicates by talking. Restaurant kitchens are similar. Here are some things to note:

- The surgeon is visually and physically captive.

- The whole team needs continual voice updates for all info.

- Effectively, the communication is simply voice commands for tools or other help.

Audio is something of the unsung hero of computer outputs, although funnily enough, it seems humans have always assumed voice input would become the primary way we communicate with our devices—all the way back to the Jetsons, Star Trek, and even Metropolis. Here's a rundown of advantages:

- Humans can understand speech at 150 to 160 words per minute (WPM); the rate is even higher for people who train on sped-up audio playback, as many in the blind community do.
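To put the two rates quoted in this guide side by side (reading at 250 to 300 WPM, listening at 150 to 160 WPM), here is a rough worked comparison for a hypothetical 300-word passage. It assumes untrained listeners and ignores pauses or skimming:

```latex
\begin{aligned}
t_{\text{read}}   &= \frac{300\ \text{words}}{300\ \text{to}\ 250\ \text{WPM}} = 1.0\ \text{to}\ 1.2\ \text{min} \\
t_{\text{listen}} &= \frac{300\ \text{words}}{160\ \text{to}\ 150\ \text{WPM}} \approx 1.9\ \text{to}\ 2.0\ \text{min} \\
\frac{t_{\text{listen}}}{t_{\text{read}}} &\approx \frac{1.875}{1.2}\ \text{to}\ \frac{2.0}{1.0} \approx 1.6\ \text{to}\ 2
\end{aligned}
```

Under these assumptions, hearing the same content takes roughly 1.6 to 2 times as long as reading it, which is one reason audio ranks as poor for quickly transferring dense information.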

- Sound is omnidirectional for both input and output, freeing you to interact with your computer from any location, and vice versa.

- Sound is easily diegetic—that is, feels like part of the world—and can be used to both give feedback & enhance worldfeel.

- Unlike most visuals, sound can be extremely subtle and still work quite well.

- Like physical inputs, sound can trigger reactions that don't require high-level brain processing, through both evaluative conditioning and more basic brainstem reflexes.

- Even extremely short sounds can be recognized once taught.

- Sound is great for affordances & confirmation feedback.

Of course, there are disadvantages, too:

- Easy for users to opt out and turn off the volume.

- There's no way to control output fidelity or to be sure what playback device the user has. Compare this to an HMD, or even to a mobile app versus a desktop app.

- Audio is inherently time-based: if the user misses it, it has to be repeated, unlike visuals, which can remain in perpetuity.

- Sounds can be physically off-putting (a brainstem reflex), or even painful.

- Across the board, audio is simply slower than the other modalities, for both input and output.

- Natural language processing has many difficulties, not the least of which is that humans aren't precise with language at all. We never have been, and likely never will be. As a result, speech recognition is fairly processing-intensive, since much work is needed to uncover the user's intent even after the words have been correctly identified.

- Sounds aren't as customizable as visuals. Confirmations must be short and pleasant. Anything designed to enhance worldfeel has to be at least quasi-realistic or recognizable, lest the user be confused by a steel beam that sounds like a rubber band when tapped.

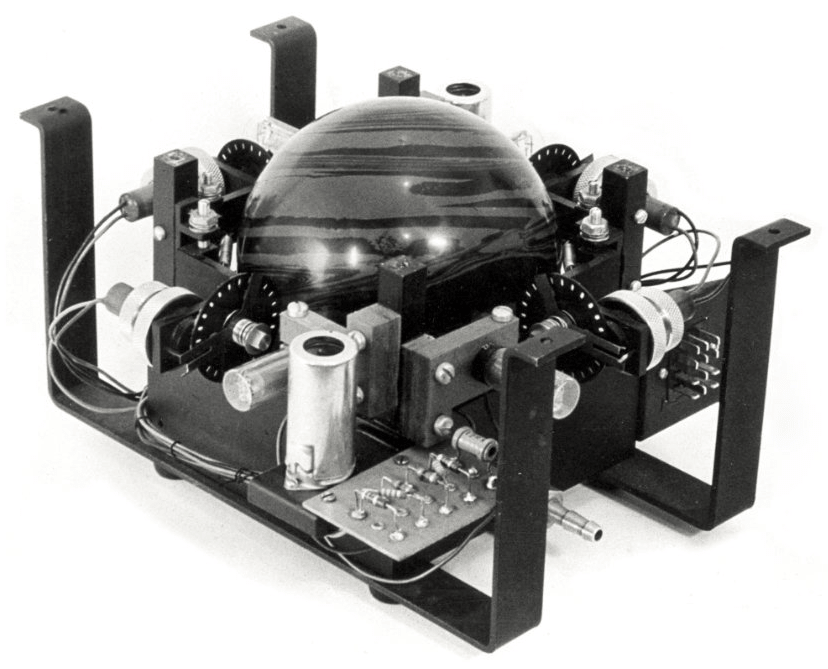

Physical Inputs & Outputs

In a sense, everything we've talked about here is physical. Light hitting your eyes and sound hitting your ears are physical phenomena, too. But our nervous systems are wired to process them separately, hence the five senses.

Instruments are a great example of conventional physical inputs and outputs, though most mechanical objects fit the bill: everything from vehicles to kitchen drawers.

Some things to note about the instrument here:

- Like most reed instruments, saxophones have a large number of physical affordances. The keys are designed to be as 'findable' as possible.

- As a result, no visuals are needed; the creator is in an uninterrupted flow.

- It's obviously true for instruments, but almost all physical inputs will have audio feedback—it's hard to remove entirely. If something physical moves, air is displaced, and we usually hear it.

- Yes, that is the Sax Guy from The Lost Boys.

Lately, with the rise of capacitive touchscreen devices, physical inputs have taken a serious downgrade, as touchscreens have displaced less sexy but more practical computer peripherals like the mouse and keyboard. Here are the advantages of physical modalities:

- Can be very fast & precise as an input, especially peripherals that allow for small, supported finger movements, like keyboards or game controllers.

- Once training has finished, muscle memory bypasses high-level thought processes, so it is easy to move into a physiological and mental 'flow'. Training feeds into the primary motor cortex; eventually, the movement no longer needs the more intensive premotor cortex or basal ganglia processing.

- Physical objects have a strong animal-brain "this is real" component that greatly amplifies any visual or auditory cues. Even shaking a controller can be mapped by the brain to an earthquake onscreen.

- Lightweight feedback is unconsciously acknowledged, much like audio feedback.

- Of all the modalities, physical inputs provide the least amount of delay between affordance & input.

- Physical inputs are the best standalone single-modality input type, as they allow for the most precision.

Of course, there are disadvantages:

- Physical input can be tiring. This is largely based on how the input is designed—people can easily drive a car for hours without real physical fatigue—but an improperly designed physical input can be tiring, painful, or even damaging.

- While gestures & poses are processed by the computer as visual input, they do require physical output. It's a common misconception that 'hand tracking' is equivalent to 'the computer understands my intent.' The reality is quite different: users must learn a new form of sign language to talk to the computer for basic tasks, and must perform the sign language within the camera's frustum.

- Physical inputs have a higher perceived cognitive load during the learning phase when users are working with unfamiliar peripherals. Users tend to forget that all physical interaction was taught, because so much of it takes place at a young age (like picking up a cup) or is part of the socialization process (like learning to write with a pencil). The fact is that it is much easier to learn to use a game controller quickly and well than a pencil, which takes years to master. And yet the bias persists.

- You can mask some of the user error rate with good software, as the mobile phone manufacturers have chosen to do. But for games, mastery of the controller is part of the criteria to play the game well.

- Physical inputs are simply less flexible than visuals: buttons can't really be moved, we only have so many materials, etc.

- Physical inputs require memorization for real flow.

- Physical outputs require variation due to human sensitivity. One person's 'strong' haptic feedback is weak to another.