This 'essay' is a direct rip of slides from various talks I gave in 2019, trying to simply describe a relatable use case for spatial computing. I recommend reading the introduction here first for context, but if you don't need it, just dive right in. The next section explains why I think this is important to focus on.

I looked for a while, and I couldn’t find a really good example of spatial computing anywhere: not in movies, not in books, not concept videos. So in lieu of time to make a concept video, I decided to walk you through an average morning in my ideal world, in which we have really nailed what I'll call "spatial computing".

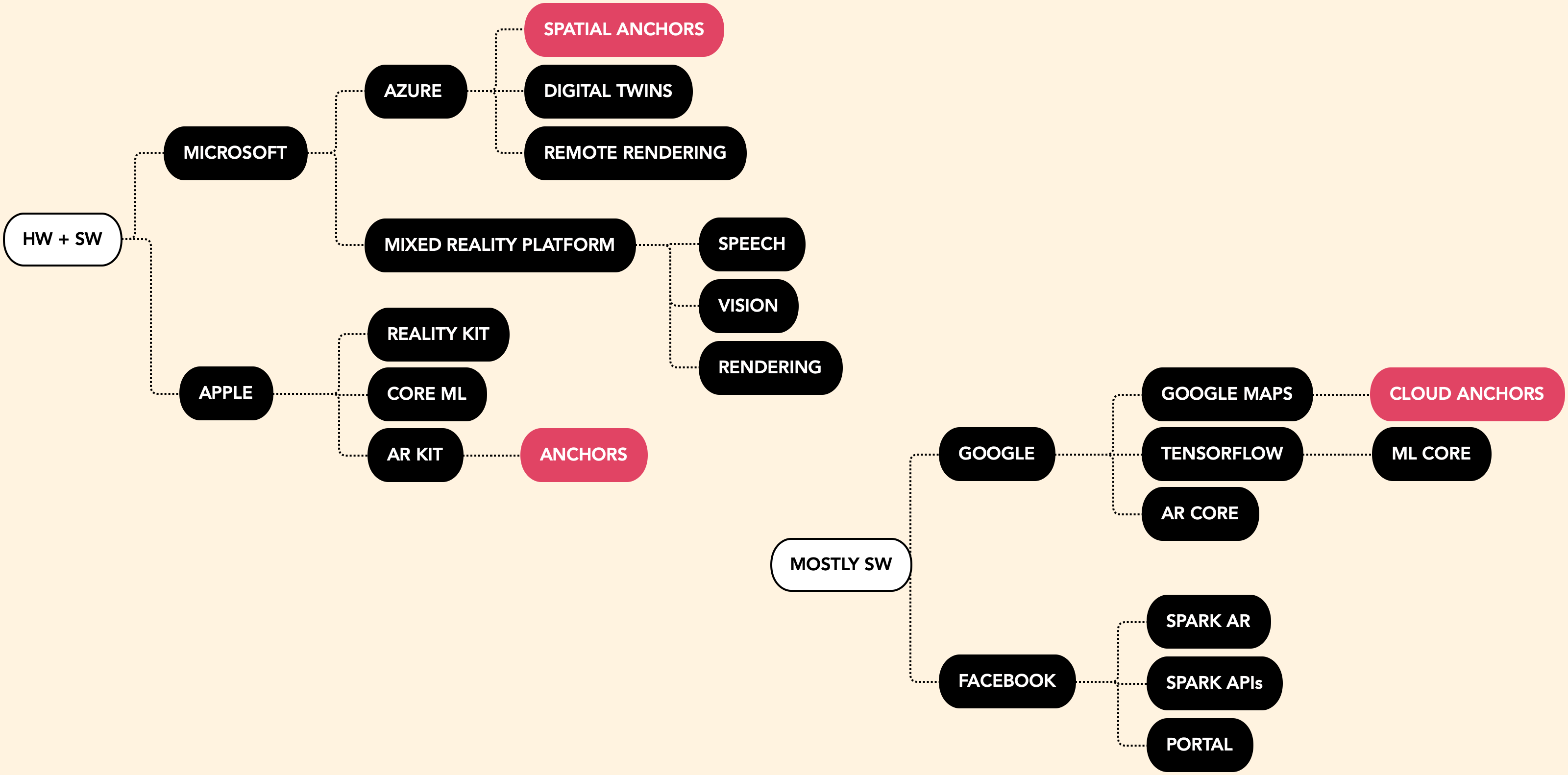

So, don’t get too hung up on the hardware or tech stack here—for most of this there are multiple solutions, all in various states of ‘emerging tech’ today. This is just a thought exercise to get us all on the same page.

This literally involves me drawing on a bunch of pictures of my house and narrating what my ideal morning looks like if we get the hardware right, if we get the software right, and most importantly, if we get the underlying systems right.

Okay. Every morning I wake up and see this around 6:30am, when my alarm goes off.

The lights are on because it’s early and it’s not bright out yet, so this helps me acclimate naturally. (This is real, by the way: I actually have this set up with Hue lights.) Except it’s 6:30 and the time change was this week, so I’m going back to sleep. I tell my alarm to shut up, or to give me fifteen more minutes. It gives me a quick little confirmation beep and that’s that.

No visuals, but some amount of automation and voice input. Nothing too exotic.

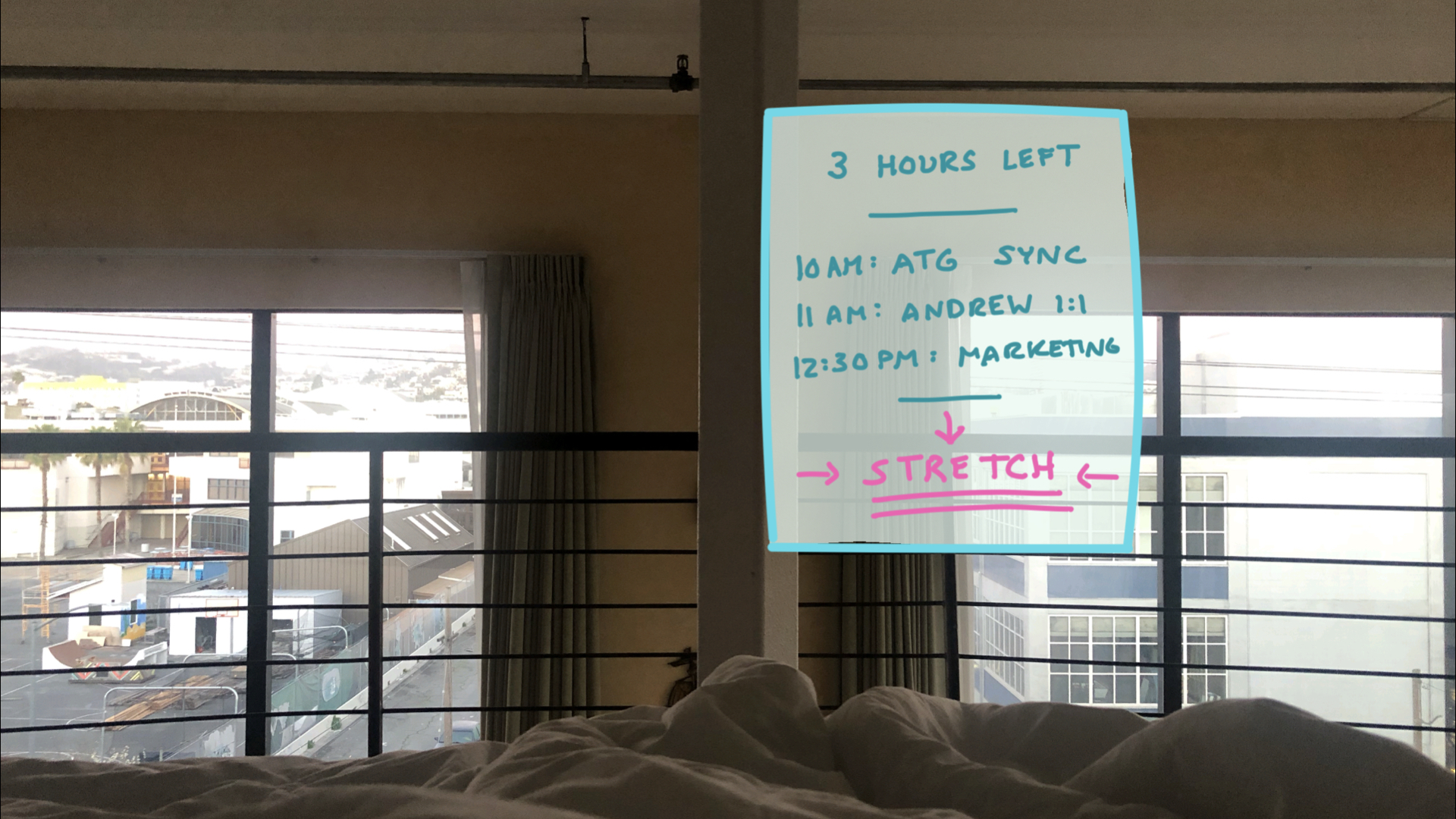

7am: okay now I am waking up for real. Usually the first thing I want to remind myself is what time I need to go to the office...

So I have this UI come up automatically first thing.

I know some folks want to start their day with meditation or coffee, without checking their device or thinking about work, but my schedule is too varied for that. So I set up this little customized data container that just tells me a few key things I want to know: how much time I have before I need to be at work, what the first couple of meetings are, and a reminder to myself to STRETCH, which is what I will do now.

How am I seeing this? Depends on where we are in the future. Could be a permanent glass monitor, could be that I put on AR glasses right away, could be a projector on a surface, could be a brain implant.

BTW, notice the lights are off now. This actually happens today in my house because I have Hue lights set up to turn off at sunrise. The future is partially here!

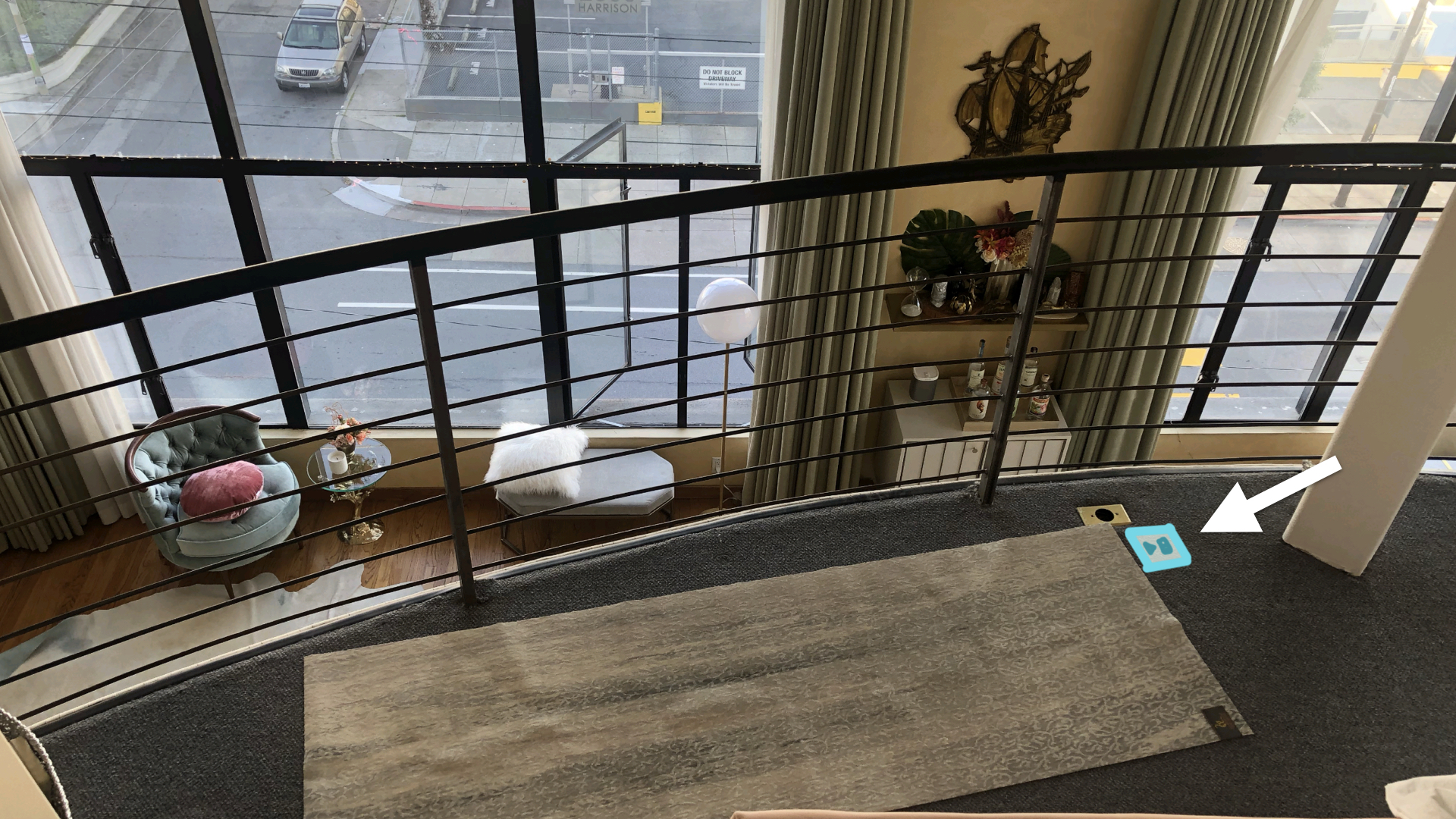

Okay so next, I roll out my yoga mat, which is the cue for the camera that is watching me, or the motion sensor I am wearing, to start playing this meditation that I’ve picked out. I’ve got this tiny little UI pinned next to the yoga mat on the ground in case I want to pick something else or change the volume. Very minimal.

Alternatively, if I look down I can see a Sonos speaker on the bar, so if I look at it and say “volume up” it knows to turn the volume up…

…and it gives me a visual confirmation of where the volume is now and where it was before. Then it goes away.

I also want coffee, and I set it up the night before to be ready to go in the morning. So if I look down at my coffee maker here…

I see a little UI that tells me how long till the coffee is ready. Importantly, it waited when it knew I was snoozing, so it needs to be tied to the alarm system.
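Here is a minimal sketch of what "tied to the alarm system" could mean in practice. Everything in it is an assumption for illustration: the `Alarm` and `CoffeeMaker` classes, the ten-minute start delay and the eight-minute brew time are all hypothetical, not a real device API. The point is only that the coffee maker derives its schedule from the moment I actually get up, so snoozing pushes the coffee back too.

```python
import math
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class Alarm:
    """Hypothetical alarm state that other devices in the house can read."""
    scheduled: datetime
    snoozed_for: timedelta = timedelta(0)

    def snooze(self, minutes: int) -> None:
        # Each snooze pushes the effective wake-up time later.
        self.snoozed_for += timedelta(minutes=minutes)

    @property
    def wake_time(self) -> datetime:
        return self.scheduled + self.snoozed_for


@dataclass
class CoffeeMaker:
    """Hypothetical coffee maker whose schedule follows the alarm."""
    alarm: Alarm
    start_delay: timedelta = timedelta(minutes=10)  # assumed: start 10 min after waking
    brew_time: timedelta = timedelta(minutes=8)     # assumed brew duration

    @property
    def ready_at(self) -> datetime:
        # Derived from the alarm every time, so a snooze shifts it automatically.
        return self.alarm.wake_time + self.start_delay + self.brew_time

    def minutes_until_ready(self, now: datetime) -> int:
        """Whole minutes left until the coffee is ready, never negative."""
        remaining = self.ready_at - now
        return max(0, math.ceil(remaining.total_seconds() / 60))


alarm = Alarm(datetime(2019, 3, 12, 6, 30))
coffee = CoffeeMaker(alarm)
alarm.snooze(30)  # "fifteen more minutes", twice
print(coffee.minutes_until_ready(datetime(2019, 3, 12, 7, 10)))  # 8
```

Notice that `minutes_until_ready` is the only thing the little UI would need to ask for.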

If I want to pause the coffee maker, I can just say ‘pause’ and the computer that is my house will know what I’m talking about via a combination of head pose and gaze, or eye tracking, or whatever new tech we have available.
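To make "the house knows what I'm talking about" a bit more concrete, here is one way it could be sketched, assuming the house already has a head position and a gaze direction from whatever tracking tech exists. The `Device` class, the room coordinates and the 15 degree tolerance are all made up for illustration. The idea is simply to pick the device closest to where I'm looking that also understands the word I said.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Device:
    """Hypothetical device record: where it is and which words it understands."""
    name: str
    position: tuple      # (x, y, z) in metres, room coordinates
    commands: frozenset


def angle_between(u, v) -> float:
    """Angle in degrees between two 3D vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    if norm == 0:
        return 180.0  # degenerate vector: treat as "not looking at it"
    return math.degrees(math.acos(max(-1.0, min(1.0, dot / norm))))


def resolve_target(command, head, gaze, devices, max_angle=15.0):
    """Return the device that understands `command` and is nearest the gaze
    direction, or None if nothing suitable is within `max_angle` degrees."""
    best, best_angle = None, max_angle
    for device in devices:
        if command not in device.commands:
            continue
        to_device = tuple(p - h for p, h in zip(device.position, head))
        angle = angle_between(gaze, to_device)
        if angle <= best_angle:
            best, best_angle = device, angle
    return best


devices = [
    Device("coffee maker", (1.0, 1.0, 2.0), frozenset({"pause"})),
    Device("speaker", (-1.0, 1.0, 2.0), frozenset({"pause", "volume up"})),
]
head = (0.0, 1.6, 0.0)
gaze = (1.0, -0.6, 2.0)  # looking straight at the coffee maker

print(resolve_target("pause", head, gaze, devices).name)  # coffee maker
print(resolve_target("volume up", head, gaze, devices))   # None
```

Both devices understand "pause", so the word alone is ambiguous. Gaze is what breaks the tie, and if I'm not looking at anything that understands the command, nothing happens.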

Let’s talk about the data layer and how it’s being surfaced here for a minute. I don’t need to know anything about the coffee maker here, other than how much time is left till it’s done. I can tell it’s already on because I can hear and smell it. The machine doesn’t need to tell me when it started because I don’t really care. If it only told me the start time, I’d have to do the math in my head to work out what I actually want to know, which is: when can I drink it?

For truly useful computing we need to be extremely thoughtful about two things: good defaults, and letting users customize their computer in new ways. Here’s what we do not want.

I completely blame movies for this: I don’t want to see any interfaces like this around my coffee maker. Don’t do it. Don’t include little charts, don’t make me read words or link to buy things, and don’t include one of those little radar graphics even if they are in every scifi movie interface since 1962. Full disclosure: I did make this design. But don’t you do it! When you’re making coffee there is one thing you need to know: when can I drink it?

Data presentation is a meaty topic and worth discussing in depth—but perhaps that’s another topic for another day. Let’s get back to my morning.

After stretching, as the coffee is brewing, I go take a shower. The water is already running, because it takes a few minutes to warm up so it starts a few minutes before the meditation ends. There’s another Sonos in here I can control remotely the same way, with voice + gaze + transient UI.

In the shower, I want some kind of interface for a few reasons.

Maybe I want to look up something (like check my calendar again, or an email). I also often just think about things in the shower, so maybe I want to note them down for later. Or maybe I just want to put on some music. Doesn’t matter, point is there’s a touch responsive, voice responsive display in the shower that lets me do whatever.

I want to make it clear I am not suggesting a digital interface for the shower itself. Sure, maybe this interface tells me the actual temperature, and maybe I can control the shower from here as well, but I should always be able to turn it on and take a shower without a computer interface. I do not want to have to wait for firmware updates to take a shower, and you don’t either.

The basic mechanical functions of non-computing devices should always be available without a computer interface. Straight up.

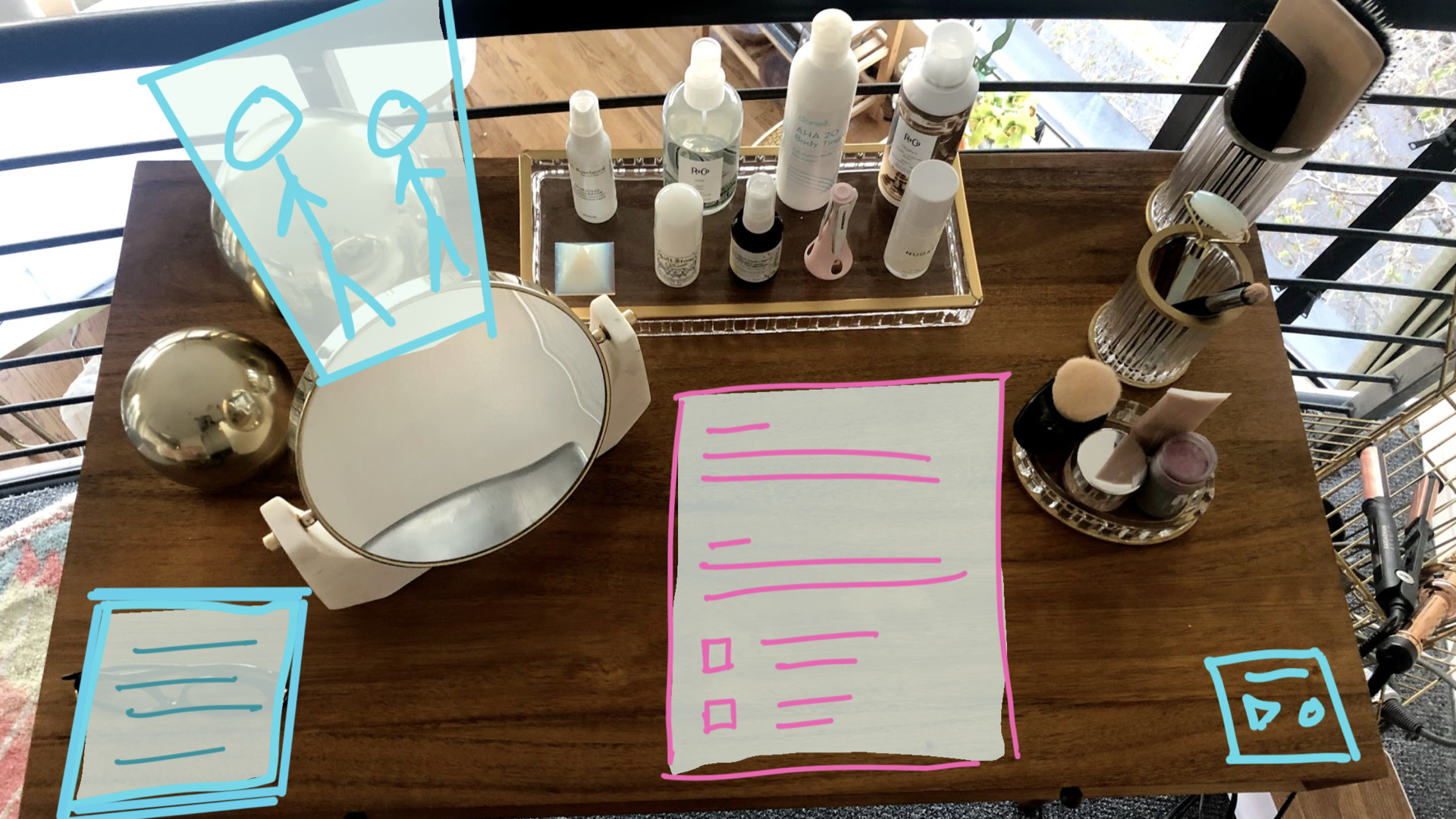

So after the shower I go get coffee, then I go over to this little desk and put on my makeup and do my hair. In my ideal future, the curling iron and straightener are already on. If I have some reminders or something they might be there too, hovering above.

The desk normally looks like this, and every day I sit there checking Slack, emails, doing general work stuff.

But what I would prefer is this.

Let’s walk through what we’re looking at here. On the right hand side is the same little music control interface that I had pinned to my yoga mat. It can be in both places at the same time, and is a customizable remote subset of a larger music app.

In the middle is a generic OS container inside which I can check my email, Slack, a browser, or whatever.

On the left hand side is my favorite thing, a new app that doesn’t exist today. It’s a combination of clipboard, to-do list, and pastebin. I can pick up objects from other UI and put them in here for sorting, safekeeping and reference.

Above I have some image pinned for reference, or maybe I’m watching a video or something. Or maybe this is just a cool gif.

All of these UI containers are moveable and customizable; I set them up like this. All can be controlled via voice, gesture or touch. How many of you have been to Dynamicland? I highly recommend you go. Dynamicland is a huge experimental space that is both a building and a computer interface: giant tracked rooms filled with paper you can write anything on, to run programs all around you and change them however you want.

I’m imagining a very similar UX with cameras surrounding me and projectors above, or of course glasses if we’ve got them.

Now if you’ve ever seen one of my talks you know I love physical inputs. I don’t have them here because this isn’t heavy duty work that requires flow: this is basically some light swiping and dictation.

So this is visual, voice, gesture, touch, but no custom computer peripherals (by ‘custom’, I include things like keyboards & mice). Since there are no real physical inputs or outputs, including haptics, I’d probably lean more heavily on audio confirm noises here in lieu of button presses, to help the user learn how to use the interface.

We’ll talk more about when to use specific types of inputs and outputs (also known as ‘modalities’) later in this series.

After my hair and makeup are done, I go get dressed, and this is usually when I want to know what the weather is like. So I say “Hey Siri” or “Hey Alexa” or “Hey Bruce,” if we finally achieve gender equality in home assistants. The spatial computer knows that I’m by my closet in the morning and haven’t asked about the weather yet, so they say, “Weather is 55º” because the weather is always 55º in San Francisco. It’s just a matter of whether it’s a cold 55 or warm 55.

No visuals needed because, unlike the volume, I don’t need to have the temperature contextualized. I know what 55º is like, so voice is fine. Then I turn to go down the stairs, and Alexa or Siri or Bruce says “Hey, you forgot your watch” because I always forget it on the nightstand. And I say ‘thank you’ and grab it.
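The "it already knows what I want" part can be thought of as a small rule over context. This is a deliberately naive sketch: the `Context` fields, the location label "closet" and the 5am to 11am definition of morning are all assumptions I've made up, and a real system would presumably learn these habits rather than hard-code them.

```python
from dataclasses import dataclass, field
from datetime import time


@dataclass
class Context:
    """Hypothetical snapshot of what the house knows when it hears the wake word."""
    location: str                                   # assumed to come from room tracking
    now: time
    asked_today: set = field(default_factory=set)   # topics already answered today


def infer_request(ctx: Context):
    """Guess what a bare wake word means from where and when it was said."""
    is_morning = time(5, 0) <= ctx.now < time(11, 0)
    if ctx.location == "closet" and is_morning and "weather" not in ctx.asked_today:
        ctx.asked_today.add("weather")
        return "weather"
    return None  # no confident guess: wait for the actual question


ctx = Context(location="closet", now=time(7, 40))
print(infer_request(ctx))  # weather
print(infer_request(ctx))  # None (already answered this morning)
```

The second call returns nothing on purpose. Once it has told me the weather, a bare "Hey Bruce" by the closet should mean something else.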

As I go downstairs I see my router which is on top of this big cabinet thing. If I don’t really look at it, I don’t see anything...

But if I do happen to glance at it, it gets a bit of a hue so I know I can interact with it to learn some more information...

And then it pops up something useful if there is anything useful, like current speed, how much my bill is, what have you.
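Those three steps are basically a tiny state machine. Here is a rough sketch, assuming the system can report how long I have been looking at an object. The dwell-time thresholds and the `glance_state` function are invented for illustration; the behavior they encode is just what I described: nothing, then a hint, then details only if there is something worth showing.

```python
from enum import Enum
from typing import Optional


class GlanceState(Enum):
    HIDDEN = "hidden"            # not really looking: nothing is drawn
    HIGHLIGHTED = "highlighted"  # glanced at: a subtle hue says "I'm interactive"
    DETAILS = "details"          # still looking: useful info pops up


def glance_state(gaze_seconds: float, info: Optional[str],
                 highlight_after: float = 0.2,
                 details_after: float = 1.0) -> GlanceState:
    """Map how long an object has been looked at to what it should display.

    Both thresholds are assumed values, not measured ones.
    """
    if gaze_seconds < highlight_after:
        return GlanceState.HIDDEN
    if gaze_seconds >= details_after and info:
        return GlanceState.DETAILS
    # Either a brief glance, or a long look with nothing useful to show.
    return GlanceState.HIGHLIGHTED


print(glance_state(0.0, "speed is fine"))  # GlanceState.HIDDEN
print(glance_state(0.5, "speed is fine"))  # GlanceState.HIGHLIGHTED
print(glance_state(1.5, "speed is fine"))  # GlanceState.DETAILS
print(glance_state(1.5, None))             # GlanceState.HIGHLIGHTED
```

The last case matters most to me: if there is nothing useful to say, the router stays quiet no matter how long I stare at it.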

Okay, I think you get the idea.

Hopefully now you have a sense of what I mean when I say spatial computing: untethered from any specific piece of hardware, reactive, listening to you. You probably noticed that a lot of this didn't feel like the Matsuda video, where you’re a prisoner in an augmented world. It felt much more like a really good internet of things.

That’s the right idea. Spatial computing is about computers…in space. Some paradigms transfer gracefully. The written word is inherently 2D; we’ll always want screens or canvases for that. But other stuff can be hidden, or accessed in different ways, through different devices and different modalities.